It took me a long time to understand Bayesian statistics. There are so many angles from which to approach it: Bayes' theorem, probability as a degree of belief, Bayesian updating, priors and posteriors, ... But my favorite angle is the following first principle:

> In Bayesian statistics, model parameters are random variables.

The "model" here can be a simple distribution. The mean of a distribution, the coefficient in logistic regression, the correlation coefficient – all these parameters are variables with a distribution.

Let's follow the implications of the parameters-are-variables premise to its full conclusion:

Parameters are variables.

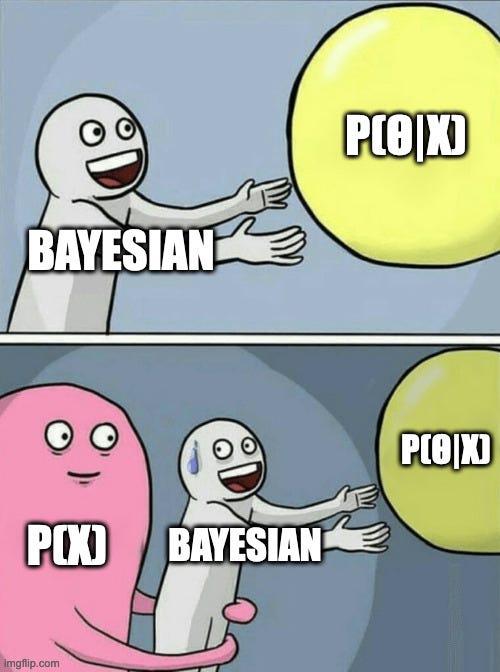

Therefore, modeling means estimating P(θ|X), the distribution of the parameters θ given the data X.

But there is a problem with P(θ|X). It's unclear how parameters given data are distributed. The inverse P(X|θ), the distribution of data given parameters, is more natural to estimate.

Fortunately, a mathematical “trick” can help: Bayes' theorem. The theorem inverts the conditioning and expresses P(θ|X) in terms of P(X|θ), which is the good old likelihood function.

Bayes' theorem also involves P(θ), the prior distribution. That's why Bayesians must specify a parameter distribution BEFORE observing data.

Model estimation amounts to updating from the prior to the posterior.

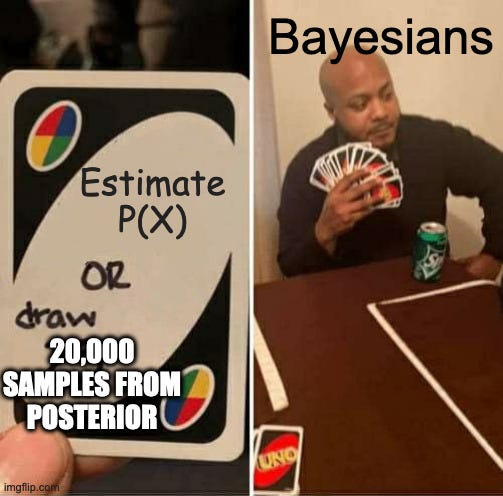
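For a model with a single parameter, the update from prior to posterior can be computed directly on a grid. Here is a minimal sketch, assuming a coin-flip model with 7 successes in 10 trials and a flat prior. The data, the prior, and the function name `grid_posterior` are illustrative choices, not something from the text above.

```python
import math


def grid_posterior(successes, trials, grid_size=101):
    """Approximate the posterior of a success probability theta on a grid."""
    # Candidate parameter values, evenly spaced on [0, 1].
    thetas = [i / (grid_size - 1) for i in range(grid_size)]
    # Flat prior (an assumption): every grid point is equally plausible.
    prior = [1.0 / grid_size] * grid_size
    # Binomial likelihood P(X | theta) of the observed data at each grid point.
    likelihood = [
        math.comb(trials, successes) * t**successes * (1 - t) ** (trials - successes)
        for t in thetas
    ]
    # Prior times likelihood, then divide by the sum so the result sums to 1.
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    normalizer = sum(unnormalized)
    posterior = [u / normalizer for u in unnormalized]
    return thetas, posterior


thetas, posterior = grid_posterior(successes=7, trials=10)
posterior_mean = sum(t * p for t, p in zip(thetas, posterior))
print(f"posterior mean of theta: {posterior_mean:.3f}")
```

A grid like this grows exponentially with the number of parameters, so it is only practical for very small models.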

Bayes' theorem also involves the term P(X), called the evidence, which is usually infeasible to compute.
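Written out with the terms named so far, Bayes' theorem reads as follows. The integral form of the evidence is the standard definition for a continuous θ and is added here for reference:

$$
P(\theta \mid X) = \frac{P(X \mid \theta)\, P(\theta)}{P(X)}, \qquad P(X) = \int P(X \mid \theta)\, P(\theta)\, d\theta
$$

Because P(X) does not depend on θ, it only rescales the result:

$$
P(\theta \mid X) \propto P(X \mid \theta)\, P(\theta)
$$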

So Bayesians usually sample from the posterior. Sampling from the posterior involves techniques such as MCMC.
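To make the sampling idea concrete, here is a minimal sketch of random-walk Metropolis, one of the simplest MCMC algorithms. It assumes a coin-flip model with 7 successes in 10 trials and a flat prior on θ. The data, step size, and function names are illustrative assumptions, and real projects would typically rely on a dedicated library instead. Note that the sampler only ever evaluates likelihood times prior, never P(X).

```python
import math
import random


def log_unnormalized_posterior(theta, successes, trials):
    """log of P(X | theta) * P(theta), up to an additive constant."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # outside the support of the flat prior
    # Flat prior contributes log(1) = 0; only the binomial kernel remains.
    return successes * math.log(theta) + (trials - successes) * math.log(1.0 - theta)


def metropolis(successes, trials, n_samples=20000, step=0.2, seed=0):
    """Random-walk Metropolis sampler for a single parameter theta."""
    rng = random.Random(seed)
    theta = 0.5  # arbitrary starting point inside (0, 1)
    current = log_unnormalized_posterior(theta, successes, trials)
    samples = []
    for _ in range(n_samples):
        # Propose a small symmetric random move around the current value.
        proposal = theta + rng.gauss(0.0, step)
        candidate = log_unnormalized_posterior(proposal, successes, trials)
        # Accept with probability min(1, ratio of unnormalized posteriors).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        samples.append(theta)
    return samples


samples = metropolis(successes=7, trials=10)
kept = samples[2000:]  # discard early draws as burn-in
posterior_mean = sum(kept) / len(kept)
print(f"estimated posterior mean of theta: {posterior_mean:.3f}")
```

The acceptance step uses a ratio of two posterior values, so the unknown constant P(X) cancels. That is why sampling works even when the evidence cannot be computed.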

This makes Bayesian models a bit more computationally intense to estimate.

From this first-principles perspective, Bayesian statistics made a lot more sense to me. It becomes clear why Bayesians have to work with a prior distribution, why estimation can be time-consuming, and why we need Bayes' theorem.

Bayesian statistics is not just a method; it's a mindset that tells you how to model the world with data. But when I learned about it, I always felt too close to the mathematics and models, so it took me a long time to understand the big principles behind Bayesian stats.

Bayesian stats is not the only "mindset": there are also frequentist stats, causal inference, supervised machine learning, and many more. That's why I wrote Modeling Mindsets: to understand the big principles and not get lost in the details.

If you've already read "Modeling Mindsets", I would be extremely grateful if you could take a moment to leave a review on Amazon. Your honest feedback helps other readers make informed decisions about the book, and it also helps me as an author.

I don't think I've laughed this much reading an article on Bayesian modeling. This was such a fun read!

Thanks for this article! I'm taking a Bayesian statistics course and would like to clarify something. Is it true that P(X) is the normalizing constant, so that in practice we can just take the numerator P(X|θ) P(θ), which is proportional to the posterior distribution, and use MCMC to sample from a posterior whose form is unknown? And is there then no need to deal with P(X)?