Conformal Prediction is Available in Print 🥳

Introduction To Conformal Prediction With Python is now also available as a print version (in color)! 🎉

What Is Conformal Prediction?

If you haven’t participated in the e-mail course, here is a short primer on conformal prediction:

Conformal prediction is a method for quantifying the uncertainty of machine learning models.

To be more exact: With conformal prediction, you can quantify the uncertainty of predictions made by machine learning models by producing prediction sets or intervals instead of just point predictions.

The basic idea behind conformal prediction is that most ML models already have a sort of uncertainty score, but we have to “calibrate” it to get reliable uncertainty quantification.

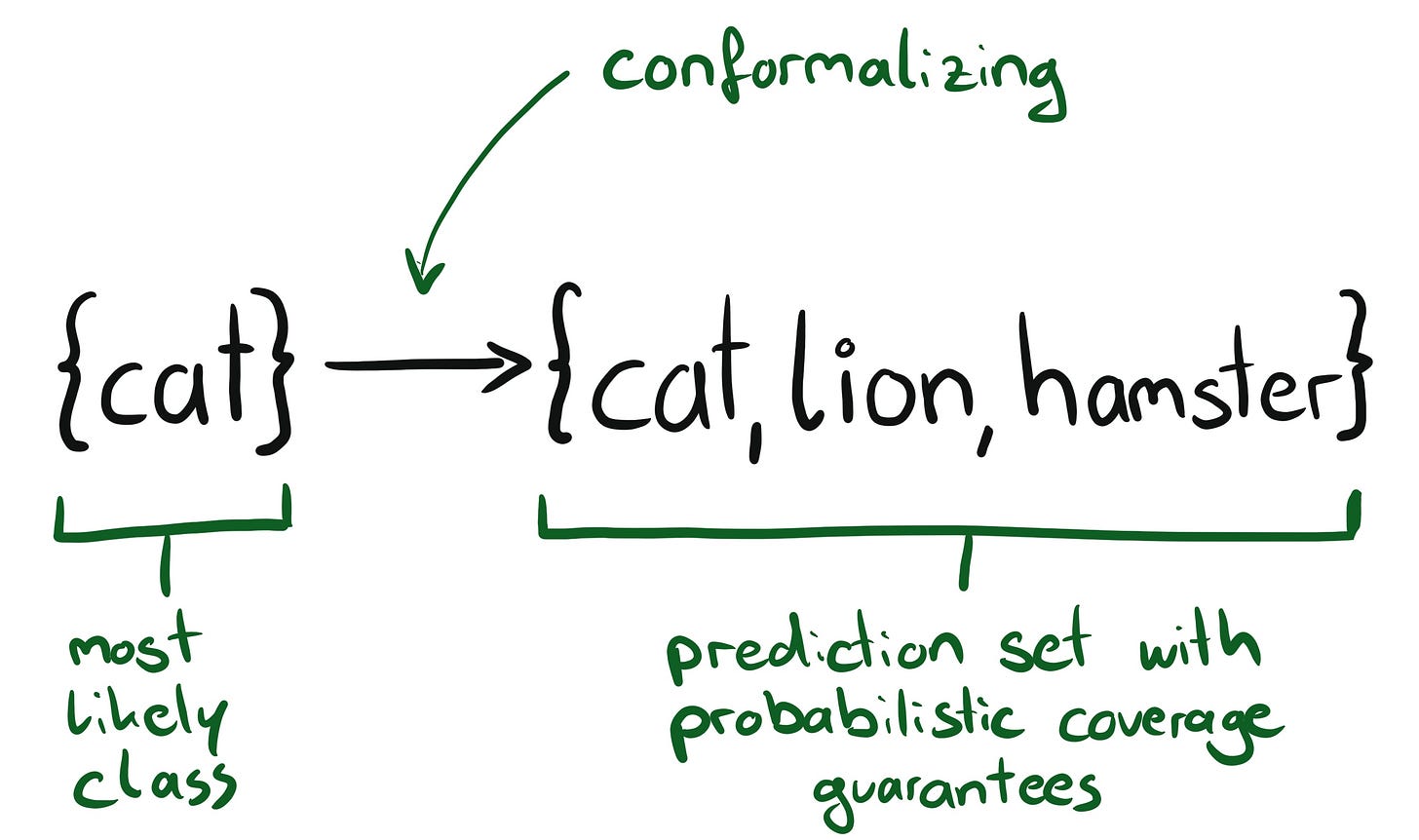

For example, most classifiers already output probabilities for the classes, which indicate how certain the model is about each class. But these aren't actually calibrated probabilities: we usually can't rely on a score of 0.8 for a class meaning that the instance belongs to this class with 80% probability.
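A small simulation can make this concrete. The sketch below is a hypothetical setup, not an example from the book: the true class probabilities come from random logits `z`, while the "model" reports `softmax(2 * z)`, which makes it deliberately overconfident. Among predictions where the model's top score is about 0.8, we then check how often the top class is actually correct.

```python
import numpy as np

rng = np.random.default_rng(2)
n, n_classes = 20000, 3


def softmax(z):
    # Numerically stable softmax over the class axis.
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


# Hypothetical setup: true class probabilities come from logits z,
# but the "model" reports softmax(2 * z), i.e. it is overconfident.
z = rng.normal(0, 1.5, size=(n, n_classes))
true_probs = softmax(z)
model_probs = softmax(2 * z)
labels = np.array([rng.choice(n_classes, p=p) for p in true_probs])

top_class = model_probs.argmax(axis=1)
top_score = model_probs.max(axis=1)
# Look only at predictions where the model's top score is about 0.8.
in_bin = (top_score >= 0.75) & (top_score <= 0.85)
accuracy = np.mean(labels[in_bin] == top_class[in_bin])
print(f"{in_bin.sum()} predictions with score ~0.8, accuracy = {accuracy:.3f}")
```

For this synthetic model, the accuracy in that bin comes out well below 0.8, which is exactly the kind of gap that conformal prediction's calibration step is meant to handle.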

Based on these probability scores, conformal prediction can produce sets of classes that are guaranteed to contain the true outcome with a user-chosen probability. As a user of conformal prediction, you can say: “I want the classification model to output sets of classes that cover the true outcome with, on average, 90% probability.”

An important step in conformal prediction is finding the right threshold on the probability scores: every class whose score clears the threshold goes into the set. This threshold is computed on an additional calibration dataset, which is what allows the 90% coverage to actually be guaranteed.

This allows you to not only output the model’s best guess, but a set of classes with probability guarantees. Neat.
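To make the calibration step concrete, here is a minimal sketch of split conformal classification with NumPy (it assumes NumPy 1.22+ for `np.quantile(..., method="higher")`). It uses one common choice of score, one minus the model's score for the true class, and the finite-sample corrected quantile level `ceil((n + 1) * (1 - alpha)) / n`. The function names and the synthetic Dirichlet "model outputs" are hypothetical stand-ins, not code from the book.

```python
import numpy as np


def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Compute the score threshold (q-hat) from a calibration set.

    cal_probs: array of shape (n, n_classes) with the model's class scores.
    cal_labels: array of shape (n,) with the true class indices.
    alpha: allowed miscoverage rate (0.1 means a 90% coverage target).
    """
    n = len(cal_labels)
    # Nonconformity score: 1 minus the score the model gave the true class.
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    # Finite-sample corrected quantile level ceil((n + 1)(1 - alpha)) / n.
    level = min(np.ceil((n + 1) * (1 - alpha)) / n, 1.0)
    return np.quantile(scores, level, method="higher")


def prediction_sets(probs, qhat):
    """Boolean mask of shape (n, n_classes): True where a class is in the set."""
    return (1.0 - probs) <= qhat


rng = np.random.default_rng(0)
n_cal, n_test, n_classes = 1000, 1000, 5
# Hypothetical "model outputs": random class scores that sum to 1.
cal_probs = rng.dirichlet(np.ones(n_classes), size=n_cal)
test_probs = rng.dirichlet(np.ones(n_classes), size=n_test)
# Synthetic true labels drawn from those scores.
cal_labels = np.array([rng.choice(n_classes, p=p) for p in cal_probs])
test_labels = np.array([rng.choice(n_classes, p=p) for p in test_probs])

qhat = conformal_threshold(cal_probs, cal_labels, alpha=0.1)
sets = prediction_sets(test_probs, qhat)
# Fraction of test points whose true class landed inside its set.
coverage = sets[np.arange(n_test), test_labels].mean()
print(f"threshold={qhat:.3f}, empirical coverage={coverage:.3f}")
```

With a 90% target, the empirical coverage on the synthetic test set should land close to 0.9; the exact value depends on the random seed. Any classifier's predicted probabilities could be plugged in place of the Dirichlet samples, as long as the calibration data was not used for training.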

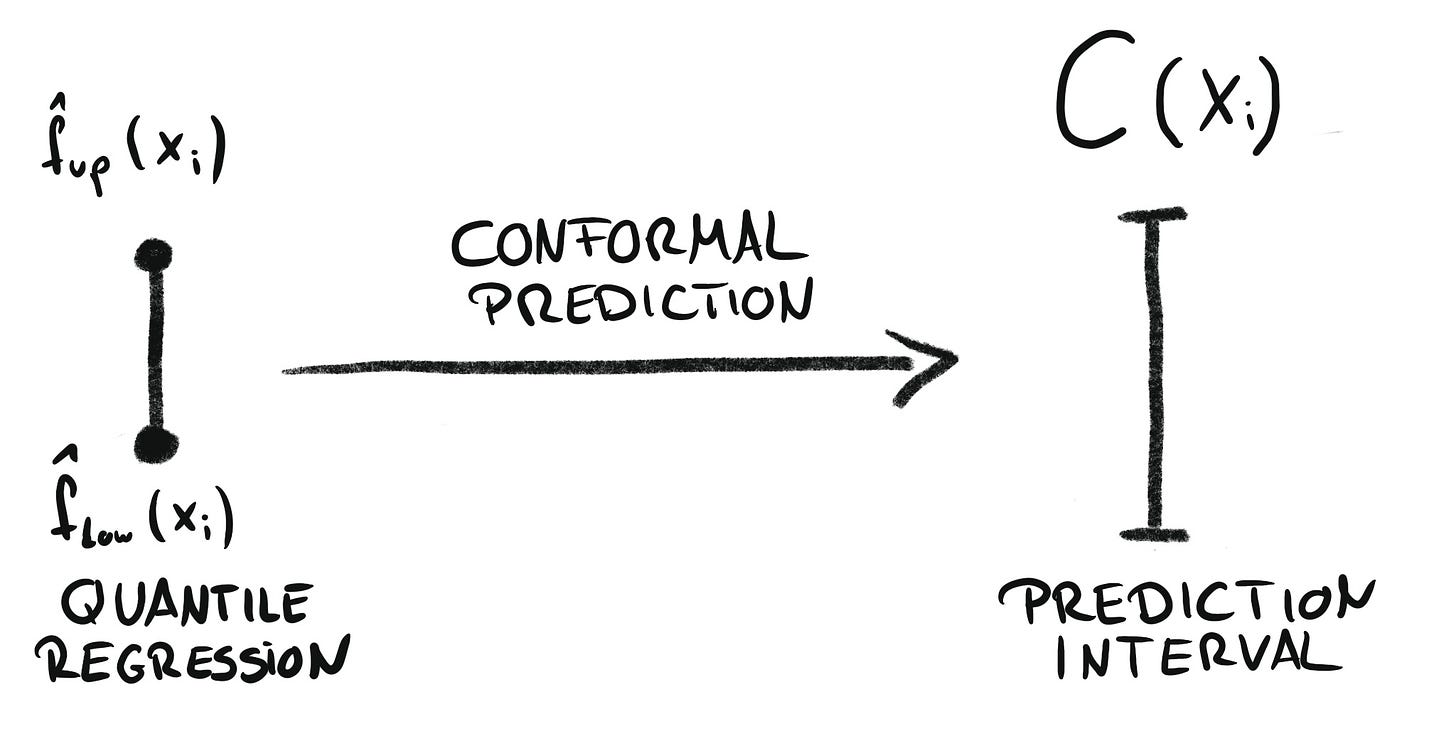

Conformal prediction isn't limited to classification models; it works for many other tasks as well, such as regression and time series forecasting.

In the case of regression, conformal prediction can turn a point prediction into a prediction interval with guaranteed coverage.
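The same recipe carries over to regression. A minimal sketch, assuming absolute residuals as the score and a toy linear "model" (both hypothetical choices for illustration, not taken from the book): the calibrated quantile of the residuals becomes the half-width of the interval around each point prediction.

```python
import numpy as np


def conformal_interval_width(cal_preds, cal_targets, alpha=0.1):
    """Half-width of split conformal intervals from calibration residuals."""
    n = len(cal_targets)
    # Nonconformity score: absolute residual of the point prediction.
    residuals = np.abs(cal_targets - cal_preds)
    # Finite-sample corrected quantile level, as in the classification case.
    level = min(np.ceil((n + 1) * (1 - alpha)) / n, 1.0)
    return np.quantile(residuals, level, method="higher")


def model(x):
    # Hypothetical point predictor standing in for a trained regression model.
    return 2 * x


rng = np.random.default_rng(1)
# Hypothetical data: y = 2x + standard normal noise.
x_cal = rng.uniform(0, 10, size=1000)
y_cal = 2 * x_cal + rng.normal(0, 1, size=1000)
x_test = rng.uniform(0, 10, size=1000)
y_test = 2 * x_test + rng.normal(0, 1, size=1000)

qhat = conformal_interval_width(model(x_cal), y_cal, alpha=0.1)
lower, upper = model(x_test) - qhat, model(x_test) + qhat
coverage = np.mean((y_test >= lower) & (y_test <= upper))
print(f"interval half-width={qhat:.3f}, empirical coverage={coverage:.3f}")
```

This variant produces intervals of the same width everywhere; other score choices can give intervals that adapt to the input, but the calibration logic stays the same.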

Conformal prediction is an entire universe of uncertainty quantification methods for different machine learning tasks.

Book About Conformal Prediction

But conformal prediction is still a fairly young topic that mostly lives in the academic sphere, and the math and statistics behind the method can seem like a barrier to entry.

My new book “Introduction to Conformal Prediction With Python” is an entry point into this easy-to-use but theoretically solid uncertainty quantification method. The book contains lots of code examples and intuitive explanations.

If you want to learn a practical and easy-to-use uncertainty quantification method, check out conformal prediction!

This book is a comprehensive guide and resource for anyone who wants to learn how to quantify uncertainty with conformal prediction by using python. Christoph's writing is clear and engaging. He provides practical examples that help readers understand how to apply conformal prediction techniques/concepts to real-world problems.

– Tony Zhang, Data Scientist at Munich Re

I really enjoyed reading the book. The data science and machine learning community needs more people like Christoph Molnar who are able to translate emerging breakthrough research into digestible concepts. I can see this book becoming a key piece in accelerating the rate of adoption of conformal ML.

– Guilherme Del Nero Maia, Principal Data Science at Jabil

Modern statistics can be a difficult topic, but Christoph has managed to make it feel easy, practical, and fun! Reading this book is a great first step towards gaining mastery of conformal prediction and related topics.

– Anastasios Angelopoulos, Researcher at the University of California, Berkeley

There’s also an ebook version available here:
