Feature Selection And Feature Importance: How Are They Related?

Understanding the Differences for Better Machine Learning

How are feature selection and feature importance related? This is a question I often came across in my research, but it’s also a practical question in applied machine learning.

tl;dr: Feature selection and feature interpretation are different modeling steps with different goals. They are, however, complementary: if you get feature selection right, you can boost interpretability.

I’ve done a lot of research in the field of machine learning interpretability, especially on permutation feature importance.

Permutation feature importance: Shuffle a feature and measure the model’s performance on test data before and after shuffling. The feature’s importance is defined as the drop in performance.
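To make the definition concrete, here is a minimal from-scratch sketch in Python. It assumes a scikit-learn regression model, mean squared error as the performance measure, and synthetic data from `make_regression`. Because MSE is an error, the “drop in performance” is computed as the increase in error after shuffling. The helper name `permutation_importance_drop` and the averaging over `n_repeats` shuffles are illustrative choices, not a reference implementation.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def permutation_importance_drop(model, X_test, y_test, n_repeats=5, seed=0):
    """Hypothetical helper: importance = increase in test MSE after shuffling a column."""
    rng = np.random.default_rng(seed)
    baseline = mean_squared_error(y_test, model.predict(X_test))
    importances = np.zeros(X_test.shape[1])
    for j in range(X_test.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X_test.copy()
            # Shuffle only column j, breaking its link to the target
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            permuted = mean_squared_error(y_test, model.predict(X_perm))
            drops.append(permuted - baseline)  # higher error = performance drop
        importances[j] = np.mean(drops)
    return importances


# Synthetic data: 5 features, only 2 of which influence the target
X, y, coef = make_regression(n_samples=500, n_features=5, n_informative=2,
                             noise=1.0, coef=True, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)
model = LinearRegression().fit(X_train, y_train)

importances = permutation_importance_drop(model, X_test, y_test)
print(np.round(importances, 2))
```

The two features with non-zero true coefficients end up with by far the largest importances, while shuffling the uninformative features barely changes the test error.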

In research papers, it’s typical to have a related work section that surveys papers similar to your own. When writing about permutation feature importance, I always wondered whether to also mention feature selection methods.

Because the methods for selection and importance seem to overlap:

In theory, you could use permutation feature importance for feature selection.

Some feature selection methods produce “scores” for the features (e.g. correlation or mutual information with the target variable), which could be interpreted as feature importance.

Models like LASSO, for example, are used both for selecting features and as sparse interpretable models.
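The dual role of LASSO can be illustrated with a short scikit-learn sketch. The data are synthetic (only features 0 and 1 affect the target), and the penalty strength `alpha=0.2` is an arbitrary illustrative value. The non-zero coefficients act as the feature selection, and their magnitudes on standardized features can be read as a simple importance measure.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

# Synthetic data (illustrative): 6 features, only features 0 and 1 affect y
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 6))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=300)

# Standardize so coefficient magnitudes are comparable across features
X_std = StandardScaler().fit_transform(X)

# alpha sets the strength of the L1 penalty; 0.2 is an arbitrary choice here
lasso = Lasso(alpha=0.2).fit(X_std, y)

selected = np.flatnonzero(lasso.coef_)  # selection: features with non-zero weight
print("selected:", selected)
print("coefficients:", np.round(lasso.coef_[selected], 2))  # interpretation
```

The same fitted object answers both questions, which is exactly why the boundary between selection and importance can look blurry.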

Let’s look at both modeling steps more deeply.

Selection versus interpretation: different goals

Let’s first separate selection and interpretation (which feature importance is a part of) and figure out the goals of these two modeling steps.

In feature selection, our goal is to reduce the dimensionality of the input feature space. There are many methods to do so, ranging from filter methods based on correlation or mutual information, to internal model constraints (like L1 regularization), to wrapper methods that train the model on different subsets of features and select the best-performing subset.
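As a rough sketch of these three families, the following uses scikit-learn’s selectors on synthetic data where only features 0 and 1 matter. The specific choices (mutual information as the filter score, LASSO with `alpha=0.2` as the embedded method, forward sequential selection with linear regression as the wrapper, and `k=2`) are illustrative assumptions, not recommendations.

```python
from functools import partial

import numpy as np
from sklearn.feature_selection import (SelectFromModel, SelectKBest,
                                       SequentialFeatureSelector,
                                       mutual_info_regression)
from sklearn.linear_model import Lasso, LinearRegression

# Synthetic data (illustrative): only features 0 and 1 drive the target
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 6))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=300)

# Filter: score each feature against the target, independent of any model
mi = partial(mutual_info_regression, random_state=0)
filter_sel = SelectKBest(score_func=mi, k=2).fit(X, y)

# Embedded: the L1 penalty pushes coefficients of unhelpful features to zero
embedded_sel = SelectFromModel(Lasso(alpha=0.2)).fit(X, y)

# Wrapper: greedily add features, scoring each candidate subset by cross-validation
wrapper_sel = SequentialFeatureSelector(LinearRegression(), n_features_to_select=2,
                                        direction="forward", cv=5).fit(X, y)

for name, sel in [("filter", filter_sel), ("embedded", embedded_sel),
                  ("wrapper", wrapper_sel)]:
    print(name, sel.get_support(indices=True))
```

On this easy synthetic problem all three agree; on real data with correlated or weakly informative features they often do not.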

The underlying motivations to reduce the feature space are also diverse:

Improve predictive performance

Speed up the model training and prediction

Reduce the feature space for comprehensibility

Cost reduction

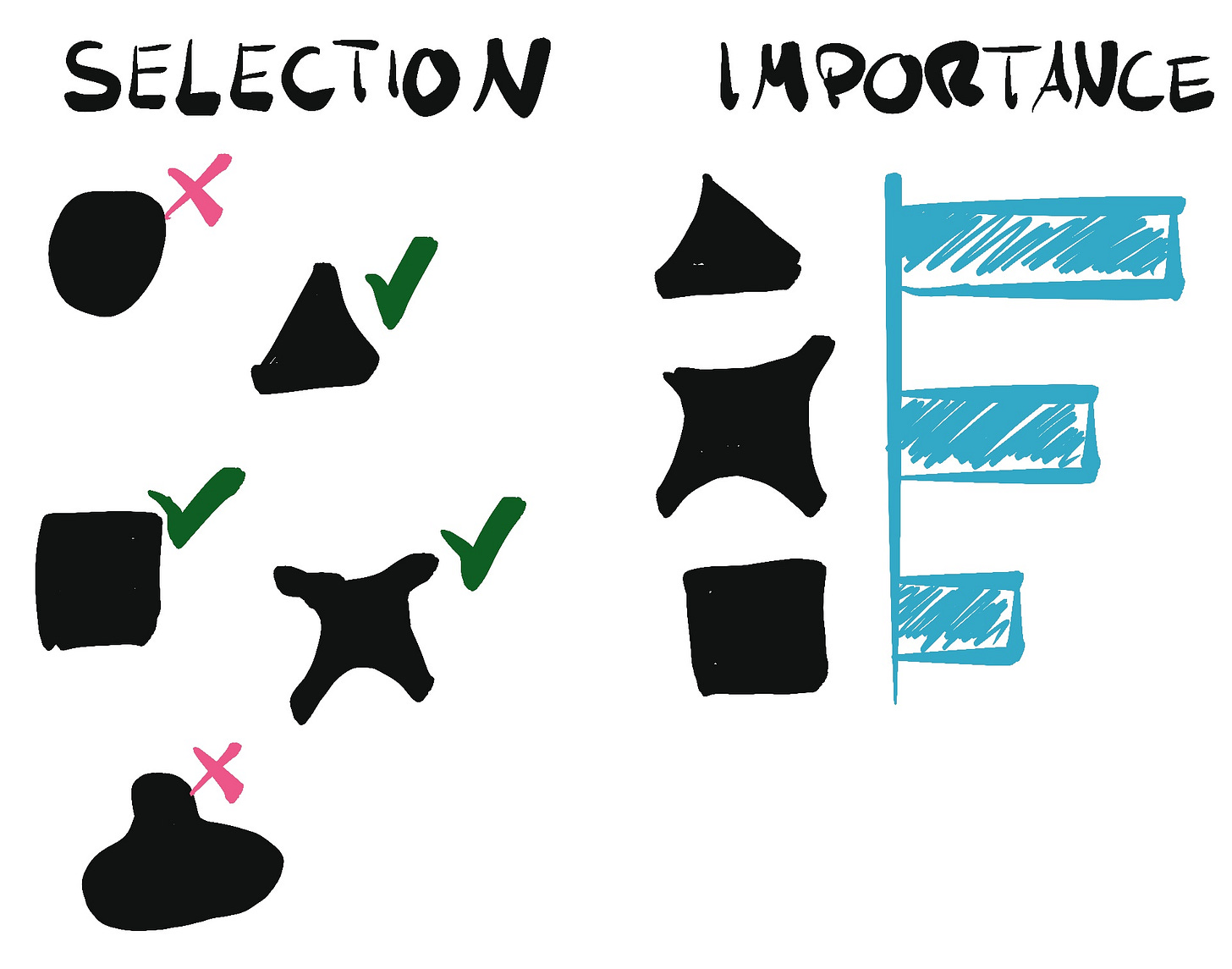

Feature selection reduces the number of features used in the model.

Feature selection is usually done either before training the model or as part of the model training pipeline.

Let’s turn to interpretation, especially feature importance. The goal of feature importance is to rank and quantify the features’ contributions to the model predictions and/or model performance. What “important” means depends on the importance method used. The methods range from “built-in” notions of importance, like standardized absolute regression coefficients in regression models, to permutation feature importance and SHAP importance.
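A small sketch can contrast two of these notions on a linear model. It assumes synthetic features on deliberately different scales and uses scikit-learn’s `permutation_importance`, which by default scores a regressor with its `score` method (R² for `LinearRegression`). SHAP importance is left out because it needs the third-party `shap` package.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic data (illustrative): features on very different scales,
# so raw coefficients are not comparable as importances
rng = np.random.default_rng(1)
X = rng.normal(size=(400, 3)) * np.array([1.0, 10.0, 0.1])
y = 2 * X[:, 0] + 0.5 * X[:, 1] + 5 * X[:, 2] + rng.normal(scale=0.5, size=400)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
scaler = StandardScaler().fit(X_train)
model = LinearRegression().fit(scaler.transform(X_train), y_train)

# 1) "Built-in" importance: absolute coefficients on standardized features
coef_importance = np.abs(model.coef_)

# 2) Model-agnostic permutation importance on held-out data (default score: R^2)
result = permutation_importance(model, scaler.transform(X_test), y_test,
                                n_repeats=10, random_state=0)
perm_importance = result.importances_mean

raw_coef = LinearRegression().fit(X_train, y_train).coef_
print("raw-scale coefficients:", np.round(raw_coef, 2))
print("rank by |standardized coef|:", np.argsort(-coef_importance))
print("rank by permutation importance:", np.argsort(-perm_importance))
```

Raw coefficients would rank feature 2 first, while both importance measures rank feature 1 first: what counts as “important” depends on the method.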

The underlying motivations to understand feature importance can be:

Understand model behavior

Audit the model

Debug the model

Improve feature engineering

Understand the modeled phenomenon

Feature importance ranks the features

Both lists of motivations overlap: We can use feature selection to improve interpretability, but we can also use model interpretation to debug the model and do feature engineering, which might also impact feature selection.

So are the two modeling steps entangled after all?

Treat selection and interpretation as different but complementary steps

My advice: Treat both as separate but complementary steps.

Feature selection is a pre-processing / model-constraining step that is mostly automated; feature interpretation is more of a hands-on, post-hoc step.

But that’s just the default to start from: while selection and interpretation are separate steps, they are related.

Feature selection can be an important step that aids the later interpretation. The fewer features we have in the model, the fewer plots to interpret, the fewer interactions, and the fewer correlated features. So if you find that your model has too many features for a meaningful interpretation, it makes sense to enforce feature selection and reduce the number of features while keeping an eye on model performance.
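Reducing the number of features while watching performance can be sketched as a small sweep: select the top k features inside a scikit-learn pipeline, cross-validate each k, and keep the smallest k whose score is close to the best one. The univariate F-test filter (`f_regression`), the candidate values of k, and the 0.01 R² tolerance are all illustrative assumptions.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Synthetic data (illustrative): 20 features, only the first 3 matter
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 20))
y = 3 * X[:, 0] - 2 * X[:, 1] + 1.5 * X[:, 2] + rng.normal(scale=0.5, size=300)

scores = {}
for k in [1, 2, 3, 5, 10, 20]:
    # Selection sits inside the pipeline, so it is re-fit in every CV fold
    pipe = make_pipeline(SelectKBest(f_regression, k=k), LinearRegression())
    scores[k] = cross_val_score(pipe, X, y, cv=5, scoring="r2").mean()

best = max(scores.values())
tolerance = 0.01  # illustrative: accept at most 0.01 R^2 loss for a smaller model
k_chosen = min(k for k, s in scores.items() if s >= best - tolerance)

print({k: round(s, 3) for k, s in scores.items()})
print("smallest k within tolerance:", k_chosen)
```

Putting the selector inside the pipeline avoids choosing features on the same data used to evaluate them, which would make the smaller models look better than they are.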

In addition, many feature selection methods throw out strongly correlated features. This is perfect for interpretation, since correlated features can ruin interpretability.
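One simple way to throw out strongly correlated features is a pairwise correlation threshold. The sketch below is one greedy variant, assuming absolute Pearson correlation and an arbitrary threshold of 0.9; the function name `drop_correlated` is hypothetical. Note that which member of a correlated pair survives depends on the column order.

```python
import numpy as np


def drop_correlated(X, threshold=0.9):
    """Hypothetical helper: keep a feature only if its |Pearson r| with
    every already-kept feature is below the threshold (greedy, order-dependent)."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    kept = []
    for j in range(X.shape[1]):
        if all(corr[j, k] < threshold for k in kept):
            kept.append(j)
    return kept


# Synthetic data (illustrative): columns 1 and 3 are near-copies of 0 and 2
rng = np.random.default_rng(0)
a = rng.normal(size=500)
b = rng.normal(size=500)
X = np.column_stack([
    a,
    a + 0.05 * rng.normal(size=500),   # almost identical to column 0
    b,
    -b + 0.05 * rng.normal(size=500),  # strongly negatively correlated with column 2
    rng.normal(size=500),              # independent feature
])

kept = drop_correlated(X, threshold=0.9)
print(kept)  # → [0, 2, 4]
```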

Feature importance interpretation can also inform the selection step. However, I wouldn’t directly use feature importance measures for feature selection. We already have plenty of feature selection methods for removing features that don’t help performance. Feature importance methods such as SHAP importance can, however, support qualitative decisions to remove a feature: for example, when inspecting a feature reveals that it’s a collider, or that it might make your model less robust.

For an overview of feature importance methods and other interpretation tools, my book Interpretable Machine Learning is a good resource.
