Use interpretability to improve and debug your ML model

How I used feature importance to fix my model

Machine learning interpretability is one of the best tools for model debugging and improvement. Improving your model is one of the many goals that interpretability can help with; see also “Don't be dogmatic about interpretability-by-design versus post-hoc.”

Here’s how ML interpretability can make your model better:

Identify target leakage. A data pre-processing error might accidentally leak information about the target into a feature. With feature importance methods, you can spot suspiciously important features that may be the source of the leak.

Debug the model. One example is finding errors in the way features are coded. If a feature dependence plot shows an increasing effect but the feature is known to decrease the outcome, the feature may be coded with the wrong sign.

Get ideas for feature engineering. Once you know which features are important, for example from a feature importance ranking, you know which ones to build on when creating new informative features.

Understand which features to remove. Use LOCO (leave one covariate out) importance to determine how the error changes when you remove a feature and retrain the model.
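To make the last point concrete, here is a minimal sketch of LOCO importance. It assumes scikit-learn, a plain linear model, and synthetic data with made-up feature names; `loco_importance` is a hypothetical helper written for this illustration, not a library function.

```python
import numpy as np
from sklearn.base import clone
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def loco_importance(model, X_train, y_train, X_test, y_test, feature_names):
    """Increase in test MSE when one feature is dropped and the model is refit."""
    full = clone(model).fit(X_train, y_train)
    base_error = mean_squared_error(y_test, full.predict(X_test))
    result = {}
    for j, name in enumerate(feature_names):
        # Keep every column except column j, then retrain from scratch.
        keep = [k for k in range(X_train.shape[1]) if k != j]
        reduced = clone(model).fit(X_train[:, keep], y_train)
        error = mean_squared_error(y_test, reduced.predict(X_test[:, keep]))
        result[name] = error - base_error
    return result


# Synthetic data (an assumption for illustration): one strong feature,
# one weak feature, and one feature unrelated to the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=500)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

loco = loco_importance(
    LinearRegression(), X_train, y_train, X_test, y_test,
    ["strong", "weak", "noise"],
)
for name, value in loco.items():
    print(f"{name}: {value:.4f}")
```

A feature whose LOCO importance is close to zero (or negative) is a candidate for removal, since the retrained model does about as well without it.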

Let’s go through a concrete example of how interpretable ML helped me fix a problem with my machine learning model.

How I identified a wrongly coded feature with interpretability

I'm currently participating in a machine learning challenge and use ML interpretability to debug and improve my models. The prediction target is water flow at 26 different locations in the Western U.S., accumulated between April and July. A tricky part of the challenge is that you have to predict the water flow at different cut-off dates that start in January and go all the way to July. This means the model has more and more information as the year progresses, and you would expect predictions issued later in the year to be better.

So, I was creating new weather-related features to help with the prediction. The model performance dropped a bit with the new features. I wasn’t alarmed yet. Since I hadn’t tuned the model but left the parameters the same, the drop could also mean that the additional features made it more difficult for the model to learn.

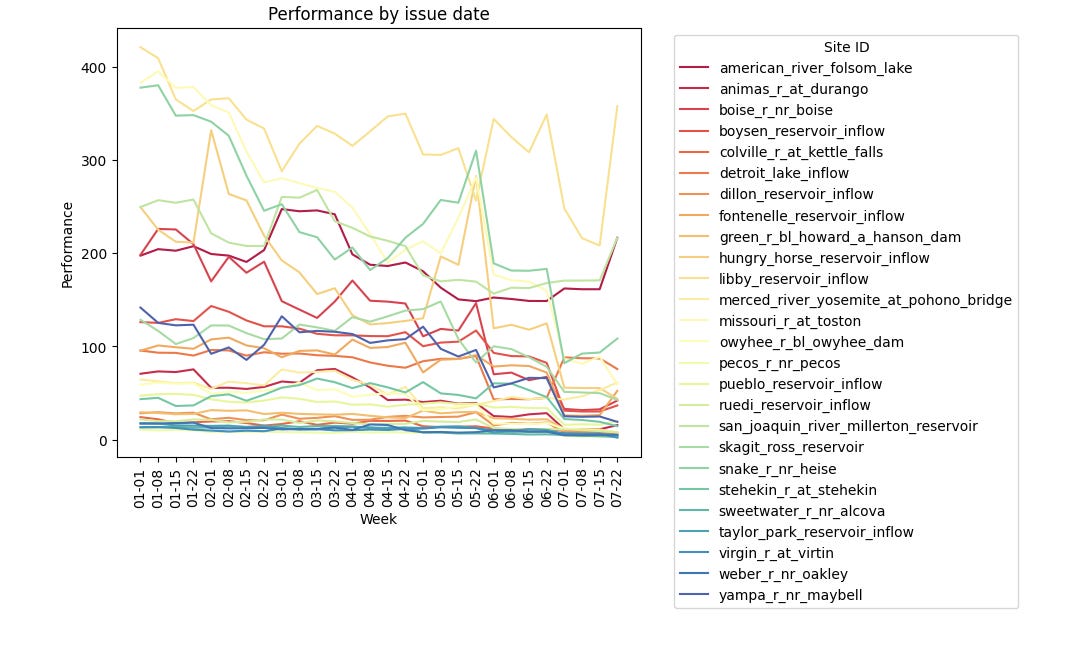

But I also monitored the evaluation metric by site and issue date. The general expectation is that the model loss is lower the closer we get to the end of July, since for these issue dates more informative features are available. But something weird happened:

For the last issue date, the loss increased sharply for most sites. Weird. This shouldn’t happen, and it alerted me that there was probably some kind of error.

So I looked into the permutation feature importance (PFI) for that last issue date. With a twist though: Instead of computing the PFI globally over all data points, I computed it only on the data points of the last issue date, shuffling each feature within that group. The result: All the weather features had an importance of zero.

This helped me identify the problem: For the last issue date, I had mistakenly introduced missing values for the weather features. I use LightGBM, which can handle missing values, so the issue didn’t show up during model training. However, the missing values in the weather features meant that for the last issue date, the model couldn’t rely on otherwise important features.

I could have found the error without ML interpretability. But that’s hindsight: I would have had to specifically monitor feature missingness by issue date.
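For completeness, such a missingness check could look roughly like this. It is a sketch that assumes the features live in a pandas DataFrame; the column names and issue-date labels are hypothetical.

```python
import numpy as np
import pandas as pd

# Toy data with three hypothetical issue dates and two hypothetical features.
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "issue_date": np.repeat(["jan", "apr", "jul"], 100),
    "snow": rng.normal(size=300),
    "precip": rng.normal(size=300),
})
# Simulated bug: the weather feature is missing for the last issue date.
df.loc[df["issue_date"] == "jul", "precip"] = np.nan

# Share of missing values per feature and issue date.
features = ["snow", "precip"]
missing_share = df[features].isna().groupby(df["issue_date"]).mean()
print(missing_share)
```

A share of 1.0 for one feature in one group, next to 0.0 everywhere else, would have pointed to the same bug directly.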

Monitoring performance together with interpretability outputs like feature importance gives you a good first signal that rings the alarm bells when you make a mistake.

Which interpretability methods can you use? Any method covered in my book “Interpretable Machine Learning” will be useful. I like to use permutation feature importance, SHAP, LOCO, and partial dependence plots.