How to get from evaluation to final model

We’ve all been there: You’ve set up a machine learning pipeline with tuning, model selection, and evaluation. Tons of data splitting, maybe with cross-validation. You are done modeling and need a final model to deploy.

Except, it’s not clear what the “final model” should be, since you have several options on how to proceed.

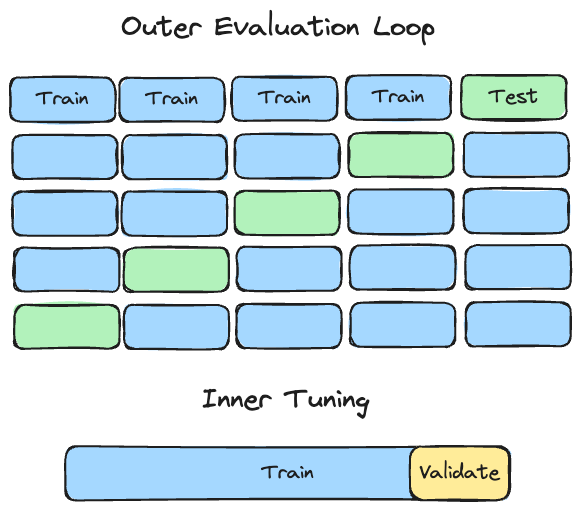

Let’s say you use 5-fold cross-validation to evaluate the model. The model is a gradient-boosted classification model (such as XGBoost or CatBoost). In each cross-validation iteration, the training data is further split into 80% for training and 20% for validation, and the validation part is used for tuning hyperparameters with random search. For more efficient data use you could alternatively tune with an inner cross-validation, but let’s keep it simple for this example.
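To make the splitting concrete, here is a minimal sketch of the index bookkeeping only, with no model involved. The function name `cv_with_inner_holdout` and the sample size of 1000 are my own choices for illustration, not part of any library.

```python
import random

def cv_with_inner_holdout(n_samples, n_folds=5, val_fraction=0.2, seed=0):
    """Yield (train, validation, test) index lists for each outer fold."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    # Deal the shuffled indices into n_folds roughly equal test folds.
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for k, test in enumerate(folds):
        # Everything outside the current test fold is available for fitting.
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        rng.shuffle(rest)
        # Carve the inner validation set (used for tuning) out of the rest.
        n_val = round(len(rest) * val_fraction)
        validation, train = rest[:n_val], rest[n_val:]
        yield train, validation, test

for train, validation, test in cv_with_inner_holdout(1000):
    print(len(train), len(validation), len(test))
```

With 1000 samples, every iteration fits on 640 rows, tunes on 160, and tests on 200, so no single model inside the CV ever trains on more than 64% of the data.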

Let’s say that you get 84% accuracy, which is estimated by averaging the performance over the 5 folds. 84% is good enough to deploy the model. But the pragmatic question is: What exactly do you deploy now?

And there’s a more philosophical side to the question: What does the 84% accuracy refer to? Ideally, whatever model we deploy in the end, we want it to be (at least) 84% accurate, but we also want to make good use of all of our data to make the model more robust and maybe even more accurate.

Let’s go through the options:

The Inside-Out Approach: Repeat what you did inside the cross-validation, but now on the entire dataset. In the example, each CV iteration split its training data into 80% training and 20% validation for tuning. So now you take all of the data, split it 80/20, and run the same random search. The model with the best hyperparameters (highest validation accuracy) gets deployed. Note that this new accuracy is not an unbiased estimate, because it’s based on the same data that was used to select the hyperparameters. This approach is consistent with saying that the 84% accuracy applies to the entire tuning-and-training procedure rather than to one specific model. Problems with this approach: The final model may have higher variance, as it depends on a single training/validation split. The 20% used for validation never gets used for training, which is another drawback. Also, the procedure now sees more data than it did inside the CV, so it’s unclear whether the 84% still holds, but typically, it should be better.
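A minimal sketch of the inside-out approach, assuming you already have a `fit(train_rows, config)` function and an `accuracy(model, rows)` function from your pipeline. Both are hypothetical placeholders here, as is the name `inside_out`.

```python
import random

def inside_out(rows, configs, fit, accuracy, val_fraction=0.2, seed=0):
    """Tune on a single 80/20 split of ALL data and return the winner.

    Returns (validation_score, config, model). The score is optimistically
    biased because the same validation rows picked the winning config.
    """
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    validation, train = shuffled[:n_val], shuffled[n_val:]
    best = None
    for config in configs:
        model = fit(train, config)
        score = accuracy(model, validation)
        # Keep the first config that reaches the highest validation score.
        if best is None or score > best[0]:
            best = (score, config, model)
    return best
```

The returned model is what you would deploy. The returned score is useful for picking the config, but the number to report remains the cross-validated one.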

The Parameter Donation Approach: Use all of the available data to train the final model. For hyperparameters, use the best hyperparameter configuration from within the cross-validation. Ideally, the random search used the same random seed in all folds, so that each hyperparameter configuration was evaluated in all 5 folds and you can pick the one with the best average. While this approach allows you to train the model with the most data, there’s a conceptual problem: the hyperparameter selection is based on training with only 64% of the overall data (0.8 × 0.8 = 0.64). Many hyperparameters control how strongly the model is regularized, but the amount of regularization needed depends on the size of the training data. So it’s unclear what happens to the performance, and it might even be worse than the 84%.
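The selection step of parameter donation can be sketched as follows. The helper `donate_hyperparameters` is a name I made up, and the scores are invented purely for illustration; they are not results from any experiment.

```python
from statistics import mean

def donate_hyperparameters(fold_scores):
    """Pick the config with the highest mean validation score across folds.

    fold_scores maps a config identifier to its list of per-fold scores.
    This only works if every fold evaluated the same set of configs,
    e.g. because the random search used the same seed in each fold.
    """
    return max(fold_scores, key=lambda config: mean(fold_scores[config]))

# Made-up validation accuracies for three configs over five folds.
fold_scores = {
    "depth=3,lr=0.10": [0.83, 0.85, 0.84, 0.82, 0.86],
    "depth=6,lr=0.05": [0.84, 0.86, 0.85, 0.83, 0.85],
    "depth=9,lr=0.30": [0.80, 0.88, 0.79, 0.81, 0.82],
}
best_config = donate_hyperparameters(fold_scores)

# Share of all data each model was fitted on while this config was chosen.
train_fraction_during_tuning = 0.8 * 0.8
```

The final step would then be to refit with `best_config` on 100% of the data, which is exactly where the mismatch comes from: the config was chosen at 64% of that size.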

The Ensemble Approach: The 5 models from cross-validation are already your final model. To predict a new data point, simply take the predictions from the five models and average them. This approach is consistent with saying that the evaluation was specific to these five models. Problems with this approach: It makes deployment more difficult, as you now have to deploy an ensemble. It’s also unclear whether the accuracy is still 84% after averaging the predictions for new data, since each of the five models was only evaluated on its own test fold, but I would expect the ensemble performance to be equal to or better than that of a single model.
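Averaging could look like the sketch below. I am assuming each model is exposed as a callable that returns the predicted probability of the positive class; with a real library you would wrap its probability method accordingly. The name `ensemble_predict` and the 0.5 threshold are illustrative choices.

```python
def ensemble_predict(models, x, threshold=0.5):
    """Average the positive-class probabilities of all models, then threshold.

    Returns (predicted_label, averaged_probability).
    """
    probabilities = [model(x) for model in models]
    average = sum(probabilities) / len(probabilities)
    return int(average >= threshold), average
```

Averaging probabilities instead of taking a majority vote over hard labels is a design choice; it keeps a usable score for ranking or for choosing a different threshold later.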

There are even more approaches:

Lazy approach: just deploy one of the 5 models from cross-validation.

A combination of inside-out and parameter donation: First you follow the inside-out approach and retrain the model using the training/validation split that you used within CV before. Then you take the best hyperparameter configuration and train a model using all data for training.

The Fuck-It Approach: Ignore all previous results. Train a random forest using all data. No evaluation. Deploy it. Call it a day.

None of these approaches is perfect, and each has its own trade-offs. It gets even more annoying with more complicated splitting setups like repeated nested cross-validation or conformal prediction.

So unfortunately I don’t have a clear answer on the best way to go from modeling to the final model.

What’s your approach to getting from evaluation to final model?

In neural networks, averaging the weights of the five models can sometimes work pretty well as an alternative to ensembling (especially if you use techniques like Git Re-Basin to align the models first).

Keeping a held-out test set to pick the best method among the ones you presented can also help.

In high-stakes situations, where small performance gains might mean a lot of money, here is what I did: instead of evaluating a model, I'd evaluate the whole training-validation strategy.

Assume you are comparing inside-out vs. parameter donation: you can simulate how those two strategies would perform out of sample and out of time by replaying your dataset over time, like a time series validation. At each step, you apply each strategy to all the data you have up to that point and then test the resulting final model against the next period of time. You can break the time intervals by week, month, quarter, or year, depending on your problem.

If parameter donation wins, then you can be confident it's the best strategy to use, even if you cannot make a meaningful claim about the test error! You can even quantify how much better you expect it to be than the other strategies. This allows you to trade off the risk of uncertain test results against the potential performance gains of training with more or fresher data.
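The replay described above can be sketched as a walk-forward loop. This is only my reading of the idea under stated assumptions: `periods` is your data already cut into chronological chunks, each strategy is a function that takes the history and returns a fitted model, and `score` measures that model on the next chunk. All three names are hypothetical.

```python
def replay_strategies(periods, strategies, score):
    """Walk forward through time, comparing model-building strategies.

    periods:    list of datasets, one per time interval, oldest first
    strategies: dict mapping a name to a function(history) -> fitted model
    score:      function(model, dataset) -> performance on that dataset
    """
    results = {name: [] for name in strategies}
    for t in range(1, len(periods)):
        # All rows observed strictly before period t.
        history = [row for period in periods[:t] for row in period]
        for name, build in strategies.items():
            model = build(history)
            # Evaluate on the next period, which the strategy never saw.
            results[name].append(score(model, periods[t]))
    return results
```

Because every strategy is scored on the same sequence of future periods, the per-period differences between two strategies are paired, which makes it straightforward to estimate the expected gain of one over the other.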