Interpret Complex Pipelines By Drawing A Box

Changes to your modeling process, like using PCA, can destroy interpretability. Here's how to leverage model-agnostic interpretation for arbitrary pipelines.

After a day of hard work, you have a nice prediction model: a shallow decision tree.

Not only does model performance seem good, but the shallow decision tree is also interpretable. Interpretability is a requirement for this project, so you have all bases covered.

You decide to “try one more thing”: transforming the features with PCA (principal component analysis).

Good news: performance improves a lot.

Bad news: PCA components can be nasty for interpretation.

Meh.

But the improvement in predictive performance can’t be ignored.

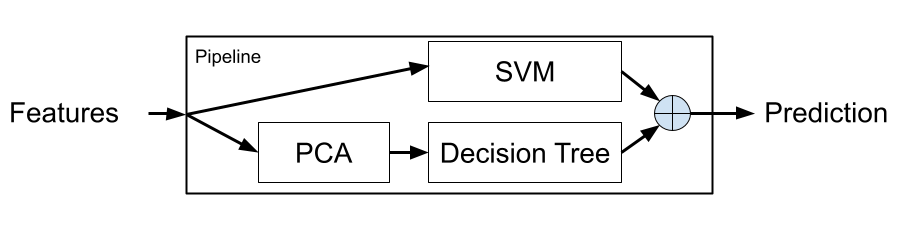

And of course, you even manage to come up with a more complex model. Your boss shouldn’t have given you so much time to fiddle around with the model.

The prediction is now the average of the predictions from the decision tree and from a support vector machine (SVM).

The predictive performance is much better than that of your initial model. Earlier, you might have managed to make sense of the PCA components, but adding that SVM is a real blow to interpretability.

Bummer.

Is all hope lost for interpretation?

Of course not. Two pieces of advice to the rescue:

Think in pipelines, not in models.

Interpret pipelines with model-agnostic interpretation tools.

Let’s dive in.

Think In Pipelines

Think of a prediction model in terms of plumbing.

Into the pipeline flow the features. They are piped through different modeling steps like transformations, unions, different models, prediction stacking, and so on. And out bubble the predictions.

The flow can be forked (model ensembles) and recombined (feature union, averaging predictions).
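As a minimal sketch of forking and recombining, assuming scikit-learn (the step names `raw` and `pca` and the synthetic data are illustrative choices), a `FeatureUnion` sends the same features down two branches and concatenates the results:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.pipeline import FeatureUnion
from sklearn.preprocessing import FunctionTransformer

X, y = make_regression(n_samples=200, n_features=5, random_state=0)

# Fork: one branch passes the raw features through unchanged (FunctionTransformer
# without a function is the identity), the other computes 2 PCA components.
# Recombine: FeatureUnion concatenates the branch outputs column-wise.
union = FeatureUnion([
    ("raw", FunctionTransformer()),
    ("pca", PCA(n_components=2)),
])
Z = union.fit_transform(X)
print(Z.shape)  # (200, 7): 5 raw columns followed by 2 PCA components
```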

Technically, a pipeline reduces multiple modeling steps to one interface. If you use sklearn Pipeline, you might have already internalized the idea.
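Here is a sketch of the example pipeline in scikit-learn, assuming a regression task on synthetic data. `VotingRegressor` is one way to average the tree and SVM predictions (my choice for illustration, not necessarily how the original model was built). Whatever happens inside, the whole thing exposes a single `fit`/`predict` interface:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import VotingRegressor
from sklearn.pipeline import Pipeline
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=300, n_features=6, noise=10.0, random_state=0)

# PCA on the features, then the average of a shallow tree and an SVM.
pipe = Pipeline([
    ("pca", PCA(n_components=4)),
    ("blend", VotingRegressor([
        ("tree", DecisionTreeRegressor(max_depth=3, random_state=0)),
        ("svm", SVR()),
    ])),
])
pipe.fit(X, y)

# One interface: raw features in, final predictions out.
y_hat = pipe.predict(X[:5])

# Under the hood: PCA first, then the plain average of the two models.
Z = pipe.named_steps["pca"].transform(X[:5])
blend = pipe.named_steps["blend"]
manual = (blend.named_estimators_["tree"].predict(Z)
          + blend.named_estimators_["svm"].predict(Z)) / 2
print(np.allclose(y_hat, manual))  # True
```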

Models Are Often Pipelines

What we refer to as a model is often already a pipeline of multiple steps that were just bundled together:

A random forest is an ensemble of decision trees. Data is piped to different trees and the predictions of the trees are averaged.

Regularized linear models (like LASSO) sometimes have feature scalers already integrated.

You could see every layer of a neural network as a piece of a pipeline because each layer is akin to a feature transformation step. When embeddings of a network are used in a different model, this image of a pipeline becomes even clearer.
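To make these three examples concrete, here is a sketch in scikit-learn on synthetic data (all model settings are arbitrary choices for illustration). It checks that a random forest's prediction is the average of its trees, that a scaler can be bundled with `Lasso` into one model (scikit-learn's `Lasso` does not scale features on its own, so the scaler is an explicit step here), and that a small neural network's prediction is a chain of layer-wise transformations whose hidden layer can feed a different model:

```python
import warnings

import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import Lasso, LinearRegression
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=200, n_features=4, noise=5.0, random_state=0)

# 1) Random forest: pipe the data through every tree, then average.
rf = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)
rf_manual = np.mean([tree.predict(X) for tree in rf.estimators_], axis=0)
print(np.allclose(rf_manual, rf.predict(X)))  # True

# 2) Regularized linear model with the scaler bundled in as a step.
lasso_model = make_pipeline(StandardScaler(), Lasso(alpha=1.0)).fit(X, y)
scaler = lasso_model.named_steps["standardscaler"]
lasso = lasso_model.named_steps["lasso"]
print(np.allclose(lasso.predict(scaler.transform(X)), lasso_model.predict(X)))  # True

# 3) Neural network: each layer acts like a feature transformation step.
with warnings.catch_warnings():
    warnings.simplefilter("ignore", ConvergenceWarning)
    net = MLPRegressor(hidden_layer_sizes=(8,), max_iter=500, random_state=0).fit(X, y)


def hidden_layer(X):
    # MLPRegressor's default hidden activation is ReLU.
    return np.maximum(0, X @ net.coefs_[0] + net.intercepts_[0])


def output_layer(H):
    # The regression output layer uses the identity activation.
    return (H @ net.coefs_[1] + net.intercepts_[1]).ravel()


print(np.allclose(output_layer(hidden_layer(X)), net.predict(X)))  # True

# Reuse the hidden layer as an embedding for a different model.
embedding_model = LinearRegression().fit(hidden_layer(X), y)
```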

Coming back to your example: we shouldn’t see the SVM or the tree as our model(s). Instead, we should think of the entire pipeline as the model, because that will help immensely when it comes to interpretation.

You should see every predictive model as a pipeline

Interpret “The Box” With Model-Agnostic Interpretation

The interpretability of the decision tree in our example is definitely lost. And even if we could make sense of the PCA components, that would not explain the final predictions, because these predictions are averages of the tree and the SVM.

But what about model-agnostic interpretation methods such as Shapley values, permutation feature importance, and partial dependence plots?

Model-agnostic interpretation methods only require manipulating the inputs and observing how the outputs change. No access to model internals (like the splits of the decision tree) is required.
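To show how little such a method needs, here is a from-scratch sketch of permutation feature importance. The function name `permutation_importance_fn` and the toy `black_box` are hypothetical, made up for illustration. The function only ever calls a prediction function: shuffle one input column, then observe how much the mean squared error grows. scikit-learn ships a full version as `sklearn.inspection.permutation_importance`.

```python
import numpy as np


def permutation_importance_fn(predict_fn, X, y, n_repeats=5, seed=0):
    """Model-agnostic permutation feature importance (sketch).

    Only calls predict_fn(X) -> predictions; never looks inside the model.
    Importance = average increase in mean squared error after shuffling a column.
    """
    rng = np.random.default_rng(seed)
    base_mse = np.mean((y - predict_fn(X)) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])   # manipulate the input...
            mse = np.mean((y - predict_fn(X_perm)) ** 2)   # ...observe the output
            increases.append(mse - base_mse)
        importances[j] = np.mean(increases)
    return importances


# Usage with a plain function standing in for any black box.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
y = 3 * X[:, 0] + 0.5 * X[:, 1]           # feature 2 plays no role
black_box = lambda A: 3 * A[:, 0] + 0.5 * A[:, 1]
imp = permutation_importance_fn(black_box, X, y)
print(imp)
```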

But it would be meaningless to apply a partial dependence plot to the decision tree alone: its inputs are PCA components and its output is only half of the final prediction, so we would run into the same problems as when interpreting the structure of the decision tree.

But model-agnostic interpretation methods don’t care what the model is.

Model-agnostic interpretation methods can also interpret arbitrary pipelines.

Because model-agnostic interpretation methods essentially just need a box with inputs and outputs.

So we just have to draw the box differently.

Don’t draw the box around the model. Draw it around the entire pipeline.

In our example, you would draw the box around the entire pipeline so that the input to the box is the features and the output is the prediction. Similar to when we just had the decision tree.
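A sketch of the difference, assuming scikit-learn and a hypothetical version of the PCA + tree/SVM pipeline on synthetic data: `sklearn.inspection.permutation_importance` accepts any fitted estimator, so we can point it at the tree alone or at the whole pipeline. Only the second box yields one importance value per original feature, computed for the final blended prediction.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import VotingRegressor
from sklearn.inspection import permutation_importance
from sklearn.pipeline import Pipeline
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=300, n_features=6, n_informative=3,
                       noise=10.0, random_state=0)
pipe = Pipeline([
    ("pca", PCA(n_components=4)),
    ("blend", VotingRegressor([
        ("tree", DecisionTreeRegressor(max_depth=3, random_state=0)),
        ("svm", SVR()),
    ])),
]).fit(X, y)

# Box around the tree only: inputs are PCA components, output is half of the blend.
Z = pipe.named_steps["pca"].transform(X)
tree = pipe.named_steps["blend"].named_estimators_["tree"]
tree_box = permutation_importance(tree, Z, y, n_repeats=5, random_state=0)

# Box around the entire pipeline: original features in, final prediction out.
pipe_box = permutation_importance(pipe, X, y, n_repeats=5, random_state=0)

print(tree_box.importances_mean.shape)  # (4,): one value per PCA component
print(pipe_box.importances_mean.shape)  # (6,): one value per original feature
```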

Now you can apply any model-agnostic interpretation method you like:

How important is a feature? Use, for example, leave-one-covariate-out (LOCO).

How does changing a feature change the prediction? Use accumulated local effects (ALE) plots.

Explain an individual prediction with SHAP.
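Because the box only needs inputs and outputs, even Shapley values can be computed from `pipe.predict` alone. Below is a brute-force sketch of exact interventional Shapley values (features outside a coalition take their values from background rows) on a hypothetical version of the example pipeline with synthetic data. It needs 2^p model evaluations per feature, so it only works for a handful of features; the shap library estimates the same kind of quantity far more efficiently when given a prediction function and background data.

```python
import itertools
import math

import numpy as np
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import VotingRegressor
from sklearn.pipeline import Pipeline
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=200, n_features=4, noise=10.0, random_state=0)
pipe = Pipeline([
    ("pca", PCA(n_components=3)),
    ("blend", VotingRegressor([
        ("tree", DecisionTreeRegressor(max_depth=3, random_state=0)),
        ("svm", SVR()),
    ])),
]).fit(X, y)


def shapley_values(predict_fn, x, background):
    """Exact interventional Shapley values for one instance x (brute force)."""
    p = x.shape[0]

    def value(coalition):
        # Features in the coalition take x's values; the rest keep background values.
        X_mix = background.copy()
        cols = list(coalition)
        if cols:
            X_mix[:, cols] = x[cols]
        return predict_fn(X_mix).mean()

    phi = np.zeros(p)
    for j in range(p):
        others = [k for k in range(p) if k != j]
        for size in range(p):
            for S in itertools.combinations(others, size):
                weight = (math.factorial(size) * math.factorial(p - size - 1)
                          / math.factorial(p))
                phi[j] += weight * (value(S + (j,)) - value(S))
    return phi


background = X[:50]
phi = shapley_values(pipe.predict, X[0], background)
print(phi)
```

The efficiency property gives a quick sanity check: the values sum to the difference between this prediction and the average prediction on the background data.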

It doesn’t matter that SVMs are not inherently interpretable.

It doesn’t matter that the prediction is a blend of two models.

To the model-agnostic interpretation method, the entire pipeline is the “model” to be interpreted. That doesn’t mean you should always draw the box around the entire pipeline; rather, draw it around the parts of the pipeline where it makes sense.

Tips For Drawing The Box

Unsure where to draw the box? Sometimes feature transformations are needed to make a feature interpretable in the first place, like scaling it to [0,1].
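One way to act on this, sketched with scikit-learn under the assumption that the [0, 1] scaling is the first pipeline step (the step name `scale01` is hypothetical): slicing a fitted `Pipeline` gives a sub-pipeline, so the box can start right after the step that makes the features readable.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVR

X, y = make_regression(n_samples=200, n_features=3, noise=5.0, random_state=0)

pipe = Pipeline([
    ("scale01", MinMaxScaler()),   # makes features readable on [0, 1]: OUTSIDE the box
    ("pca", PCA(n_components=2)),  # hard to interpret: INSIDE the box
    ("svm", SVR()),
]).fit(X, y)

scaler = pipe[0]  # the first step on its own
box = pipe[1:]    # sub-pipeline from PCA to the prediction (shares the fitted steps)

X01 = scaler.transform(X)  # the inputs to the box are the [0, 1]-scaled features
print(np.allclose(box.predict(X01), pipe.predict(X)))  # True
```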

Here are some tips for where to draw the lines of the box:

Make sure that the inputs to the box are interpretable.

Move all non-interpretable feature transformations inside the box.

The output should also be on an interpretable scale. For example, use probabilities instead of raw scores.

Even modeling steps that are not learned from data can live inside the box, like a log transformation.
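A minimal sketch of these tips with scikit-learn, assuming a binary classification task on synthetic, strictly positive features: the fixed log transform and the learned scaler live inside the box, the box takes features in their original units, and it returns probabilities rather than raw log-odds. The function name `box` is hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer, StandardScaler

X, y = make_classification(n_samples=300, n_features=4, random_state=0)
X = np.exp(X)  # pretend these are skewed, strictly positive features in original units

model = make_pipeline(
    FunctionTransformer(np.log),  # fixed step, not learned from data: inside the box
    StandardScaler(),             # learned, not interpretable on its own: inside the box
    LogisticRegression(),
).fit(X, y)


def box(X_raw):
    """Input: features in original units. Output: probability of class 1."""
    return model.predict_proba(X_raw)[:, 1]


p = box(X[:5])
print(p)
```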

Many interpretation libraries let you provide a custom prediction function, and this is how you can apply model-agnostic interpretation to pipelines, or to arbitrary boxes. For example, shap accepts a prediction function such as the predict method of a sklearn Pipeline, and my iml package (for R) allows for providing custom prediction functions.
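To illustrate what providing a custom prediction function means, here is a from-scratch partial dependence sketch (the function name `partial_dependence_fn` is hypothetical) that accepts any callable mapping a feature matrix to predictions. Drawing the box is then just a matter of which function you pass in, here the `predict` method of a hypothetical PCA + SVM pipeline on synthetic data.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVR


def partial_dependence_fn(predict_fn, X, feature, grid_size=10):
    """Partial dependence of predict_fn on one feature (model-agnostic sketch)."""
    grid = np.linspace(X[:, feature].min(), X[:, feature].max(), grid_size)
    averages = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value                 # set the feature for every row
        averages.append(predict_fn(X_mod).mean())  # average the box's output
    return grid, np.array(averages)


X, y = make_regression(n_samples=200, n_features=3, noise=5.0, random_state=0)
pipe = make_pipeline(PCA(n_components=2), SVR()).fit(X, y)

# The box: original features in, final predictions out.
grid, pd_curve = partial_dependence_fn(pipe.predict, X, feature=0)
print(pd_curve)
```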

In Other News

My book Modeling Mindsets is now available in paperback. It has a handy size, perfect to take with you when you travel. It also makes a great gift for a colleague who might profit from a more open mind on modeling data. 😉

Whenever you are ready

Read my book Interpretable Machine Learning (for free).

I’m experimenting with interpretation cheat sheets. Check out logistic regression and SHAP plots.