When you prompt ChatGPT to suggest meme formats, you will likely get “Drake Hotline Bling”.

The “Distracted Boyfriend” meme is often second in ChatGPT’s output.

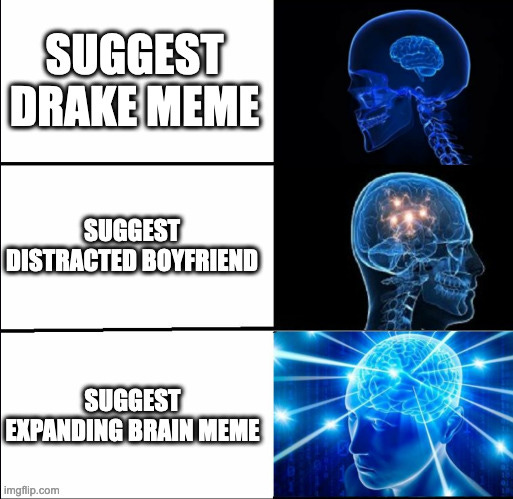

And sometimes, when you prompt ChatGPT to “think hard”, it suggests the “Expanding Brain” meme format.

ChatGPT suggests a few more meme formats, but the pantheon is small. Try it for yourself. If you try hard enough, you can prompt ChatGPT to produce a more fringe meme, but you almost have to aim for that specific meme.

Meme Collapse

ChatGPT’s problem is that it “loves” the cozy center of the distribution. Large language models generate text by predicting a likely next token, one token at a time. And if the input text has the token sequence “m” + “eme” in it, I assume the probabilities for “dr” and “ake” rise strongly, much more than for “squ” + “id” + “ward”, for example.

The reason is the training data: Drake and Distracted Boyfriend are popular. If you look at the top meme templates on imgflip, these are the all-time top memes:

Even the few other meme templates ChatGPT suggests are typically on the first page of popular memes. Not much of a memelord, our ChatGPT.

You can play around with the temperature of an LLM to make it more creative. The temperature changes how evenly the probabilities are spread when sampling the next token. When I increased the temperature, GPT-4 suggested slightly more creative memes, but the higher the temperature, the more likely the output was to derail and end in a string of garbage.
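To make the effect of temperature concrete, here is a minimal sketch of temperature-scaled softmax in plain Python. The meme names and their scores (logits) are made up for illustration; they are not real model outputs, and real LLMs work on tokens rather than whole meme names.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, flattened or sharpened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores: popular formats get high scores, fringe ones low scores.
meme_logits = {
    "Drake Hotline Bling": 5.0,
    "Distracted Boyfriend": 4.0,
    "Expanding Brain": 3.0,
    "Squidward": 0.5,
}

for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(list(meme_logits.values()), temperature)
    summary = ", ".join(
        f"{name}: {p:.3f}" for name, p in zip(meme_logits, probs)
    )
    print(f"T={temperature}: {summary}")
```

A low temperature concentrates almost all probability on the top meme, while a high temperature gives the fringe option a real chance. The same flattening also raises the odds of sampling tokens that make no sense, which is one way to think about the derailing I saw.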

Model Collapse

The story is much bigger than memes. Generative AI narrows down more than just our memes to the most likely options. Generated images, for example, all have the same default vibe. Generated texts all have that flowery language by default. You can prompt away from that center of gravity, but it takes effort and you probably still don’t get into the tails of the distribution.

The more the Internet consists of AI-generated texts, the narrower the distribution of future training data becomes. LLMs trained on this text may become even more narrow, starting a downward spiral. This is called model collapse: Tails of the distribution are thinned out and disappear. The distribution becomes narrower over time.
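The thinning of the tails can be illustrated with a toy simulation. This is only a sketch under strong assumptions: each "model" is just the frequency table of a finite sample drawn from the previous model, and the meme names, weights, and sample sizes are invented. It is not how LLMs are actually trained, but it shows the mechanism: once a rare item fails to appear in a sample, it has probability zero in every later generation.

```python
import random
from collections import Counter


def make_long_tail():
    """Hypothetical starting distribution: two popular memes, twenty fringe ones."""
    weights = {"Drake Hotline Bling": 30, "Distracted Boyfriend": 20}
    for i in range(1, 21):
        weights[f"fringe meme {i}"] = 1
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def next_generation(probs, sample_size, rng):
    """Sample from the current distribution and refit it as relative frequencies."""
    # Only items that still have non-zero probability can be sampled.
    alive = [name for name, p in probs.items() if p > 0]
    samples = rng.choices(alive, weights=[probs[n] for n in alive], k=sample_size)
    counts = Counter(samples)
    return {name: counts[name] / sample_size for name in probs}


def support_size(probs):
    """Number of memes that still have non-zero probability."""
    return sum(1 for p in probs.values() if p > 0)


def simulate(probs, generations, sample_size, seed=0):
    """Return the list of distributions, one per generation, starting with the input."""
    rng = random.Random(seed)
    history = [probs]
    for _ in range(generations):
        probs = next_generation(probs, sample_size, rng)
        history.append(probs)
    return history


history = simulate(make_long_tail(), generations=30, sample_size=50)
for generation in range(0, len(history), 5):
    print(f"generation {generation}: {support_size(history[generation])} memes left")
```

The number of surviving memes can only stay the same or drop from one generation to the next, because nothing in the loop can bring a lost meme back. Fresh human-made data would be the only way to refill the tails in this toy setup.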

OpenAI released ChatGPT shortly after I had decided to become a full-time writer. I doubted my career choice for a few weeks. AI influencers spread wild extrapolations of LLM abilities. And LLMs did get better, to a degree. However, meme collapse and model collapse are a serious problem, and I don’t see how they can be avoided. In a way, that is also a good opportunity for us to be creative and original, and to push the boundaries.

In other news

Timo and I published a new chapter of our book Supervised Machine Learning for Science. And, good news: You can read it for free! The entire book, actually:

The new chapter is about how to report the results when using machine learning in applied science. The chapter covers resources on what to include in the paper, and how to document your model and dataset.

As always, a refreshing insight. I really do not like a lot of the newsletters out there, but this one is always interesting and helpful! And thanks for putting out your book for free!

Nicely put! I've always thought it was far-fetched whenever people said AI would destroy writers, artists, animators [insert any creative professional] jobs.

Creativity is all about thinking outside the box, and models based on statistics are made to fit the box as much as possible as far as I understand it.