The Galactic Guide to SHAP Values

Exploring SHAP values through a universe analogy

Want a deeper dive? Check out my book on SHAP which provides theory and hands-on examples to explain your machine learning models!

One week left until I publish my new book on SHAP for interpreting machine learning models 🤩 For today’s post, we’ll dive into my favorite analogy for interpreting SHAP explanations.

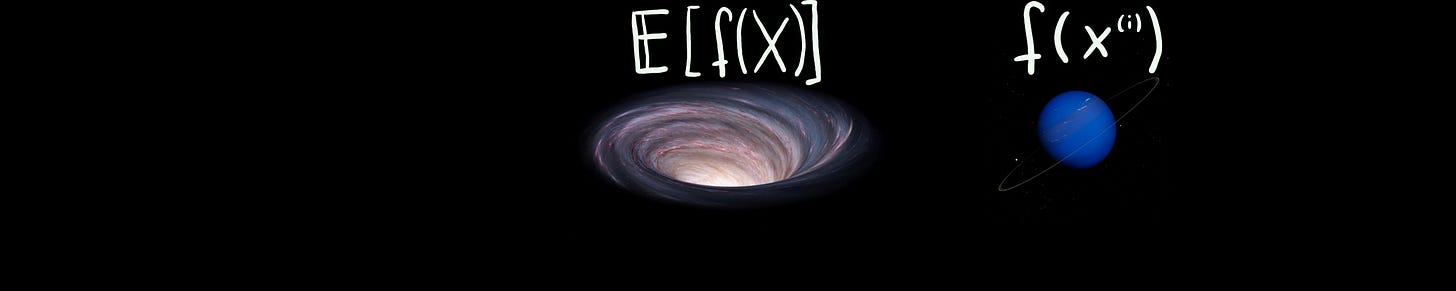

We start with a one-dimensional universe. Objects can move up or down. For better display, we move them left (=down) or right (=up).

There are only two objects in this simplified universe:

A center of gravity

A planet

But what does this have to do with SHAP and explaining the predictions of a machine learning model?

The center of gravity is the expected prediction for our data, E[f(X)]. It’s the center of gravity in the sense that it’s a “default” prediction: if we know nothing about a data point, this is where we would expect the planet (=the prediction for a data point) to be.
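To make the center of gravity concrete, here is a minimal sketch in plain Python. The model `f` and the four data rows are made up for illustration; the point is only that E[f(X)] can be estimated as the average prediction over the data, and that the planet's distance from it is what the forces will have to explain.

```python
from statistics import mean

# Hypothetical toy model: any function from features to a number would do.
def f(x1, x2):
    return 10.0 - 2.0 * x1 + 3.0 * x2

# Hypothetical data standing in for X (made up for this sketch).
data = [(4.1, 1.0), (2.0, 0.5), (3.0, 2.0), (1.0, 1.5)]

# Center of gravity: the average prediction, an estimate of E[f(X)].
base_value = mean(f(x1, x2) for x1, x2 in data)

# The planet: the prediction for one data point (here the first row, x1=4.1).
prediction = f(*data[0])

# How far the planet sits from the center of gravity.
offset = prediction - base_value

print(f"E[f(X)] = {base_value:.2f}, f(x) = {prediction:.2f}, offset = {offset:.2f}")
```

A negative offset means the planet sits below (left of) the center of gravity.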

But then we have our actual prediction f(xi), which may lie outside the center of gravity.

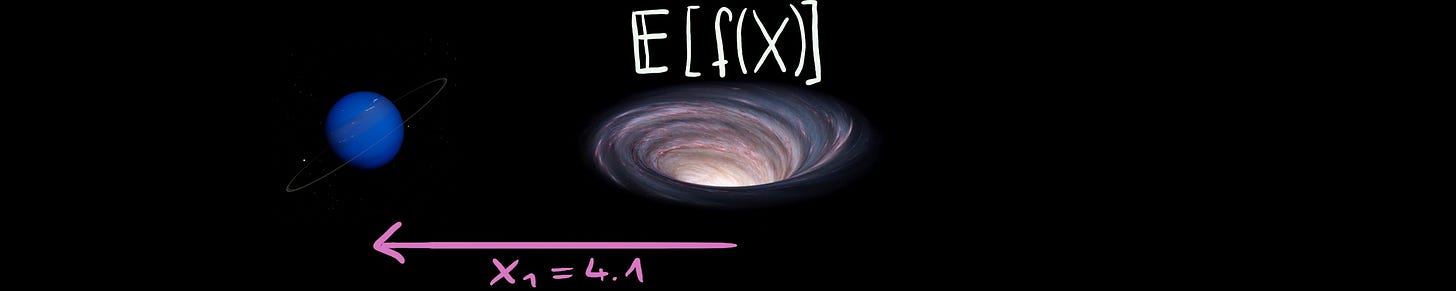

The planet can only move away from the center of gravity if forces act upon it. The forces are the feature values. Let’s say we know one of the feature values, for example x1=4.1. It acts upon the prediction and pushes the planet downwards.

This force is what we aim to quantify with SHAP values.

But this force alone moves the planet to the wrong location: the actual prediction (aka its position in the universe) lies elsewhere.

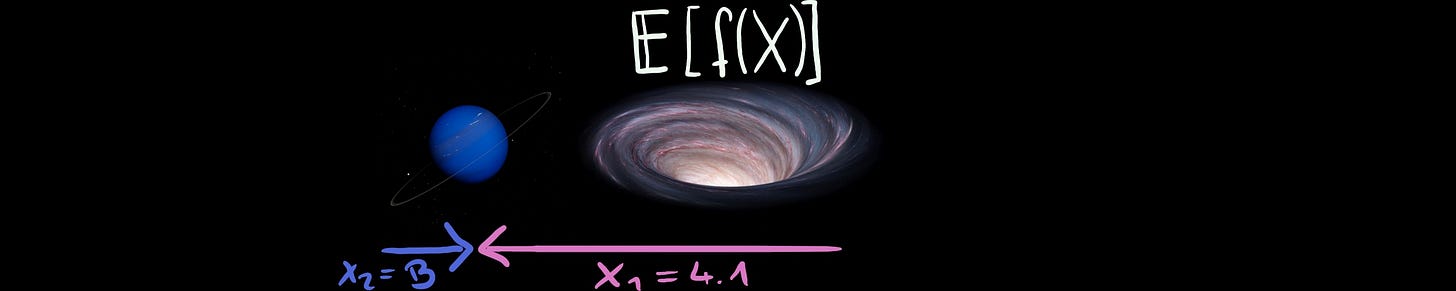

That’s because we have only considered one of the forces, but there are several acting on that prediction, as many as there are features in the machine learning model.

So we also have to consider the other forces, like x2, which might push upwards:

In the end, the prediction is a kind of equilibrium. When we consider all the forces that act upon the prediction, they add up to the difference between the prediction and the expected prediction E[f(X)]. This is called the efficiency axiom of SHAP values.
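The equilibrium can be checked numerically. The sketch below computes exact Shapley values for a made-up three-feature model by brute force, averaging each feature's marginal contribution over all feature orderings. The model `f`, the `background` rows, and the way unknown features are filled in (from the background rows) are assumptions of this sketch; it is not how the shap package computes its estimates.

```python
from itertools import permutations
from statistics import mean

def f(x):
    # Hypothetical toy model with an interaction between x[0] and x[2].
    return 10.0 - 2.0 * x[0] + 3.0 * x[1] + x[0] * x[2]

# Hypothetical background data standing in for X.
background = [(4.1, 1.0, 0.0), (2.0, 0.5, 1.0), (3.0, 2.0, 2.0), (1.0, 1.5, 1.0)]
x = background[0]  # the data point to explain
n = len(x)

def value(known):
    # Average prediction when only the features in `known` are fixed to
    # their values in x; the rest are taken from each background row.
    return mean(
        f(tuple(x[j] if j in known else row[j] for j in range(n)))
        for row in background
    )

orders = list(permutations(range(n)))
phi = [0.0] * n  # one force (Shapley value) per feature
for order in orders:
    known = set()
    for j in order:
        before = value(known)
        known.add(j)
        phi[j] += (value(known) - before) / len(orders)

base_value = value(set())  # E[f(X)], the center of gravity
prediction = f(x)          # f(x), the planet

print("forces:", [round(p, 3) for p in phi])
print("sum of forces:     ", round(sum(phi), 6))
print("f(x) minus E[f(X)]:", round(prediction - base_value, 6))
```

The last two printed numbers agree: the forces sum to the gap between the prediction and the expected prediction. Brute force over all orderings only works for a handful of features, since the number of orderings grows factorially.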

Also, note that SHAP knows no “order” in which we add the features; the sequence here only served to build up the analogy.
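One way to see why the order drops out is the permutation form of the Shapley value, written here in notation that is my own shorthand rather than taken from the shap package: p is the number of features, Π is the set of all p! feature orderings, S_j^π is the set of features that come before feature j in ordering π, and v(S) is the expected prediction when only the features in S are known.

```latex
\phi_j = \frac{1}{p!} \sum_{\pi \in \Pi} \left[ v\left(S_j^{\pi} \cup \{j\}\right) - v\left(S_j^{\pi}\right) \right]
```

Each ordering gives feature j a marginal contribution, and the Shapley value is the average over all orderings, so no single ordering is privileged.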

I didn’t pull this analogy out of thin air: In the shap Python package, there’s the force plot, which uses the analogy of forces to visualize SHAP values:

In the force plot, E[f(X)] is referred to as the “base value”.

I prefer the waterfall plot over the force plot because I think it’s easier to read:

We can see the features as “forces” that push the prediction away from the mean, although they may also cancel each other out. SHAP values indicate which features need to be preserved to keep the prediction from regressing to the mean.

Want to learn more about SHAP? My book Interpreting Machine Learning Models With SHAP is a comprehensive guide!