The SHAP Book is Available in Print 🥳

Interpreting Machine Learning Models With SHAP is now also available as a paperback (in color)! 🎉

What’s SHAP?

SHAP, an explainable AI technique, is a method to compute Shapley values for machine learning predictions. It’s a so-called attribution method that fairly attributes the predicted value among the features.
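To make "fairly attributes" a bit more concrete, here is a minimal sketch of an exact Shapley value computation for a single prediction. It is an illustration under simplifying assumptions, not how the shap package is implemented: the names `exact_shapley` and `toy_model` are hypothetical, and an "absent" feature is simply set to a fixed baseline value, whereas SHAP typically averages over background data.

```python
from itertools import permutations


def exact_shapley(predict, x, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)      # start with every feature "absent"
        prev = predict(current)
        for i in order:
            current[i] = x[i]         # switch feature i to its actual value
            new = predict(current)
            phi[i] += new - prev      # marginal contribution of feature i
            prev = new
    return [p / len(orderings) for p in phi]


def toy_model(z):
    # Hypothetical model: one additive term plus an interaction term.
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]

phi = exact_shapley(toy_model, x, baseline)
print(phi)  # [2.0, 3.0, 3.0]
```

The attributions add up to the difference between the prediction for `x` (8.0) and the prediction for the baseline (0.0), and the interaction term is split evenly between the two features involved. Enumerating all orderings only works for a handful of features, which is why practical tools rely on approximations or model-specific shortcuts.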

SHAP is like the Swiss army knife of machine learning interpretability:

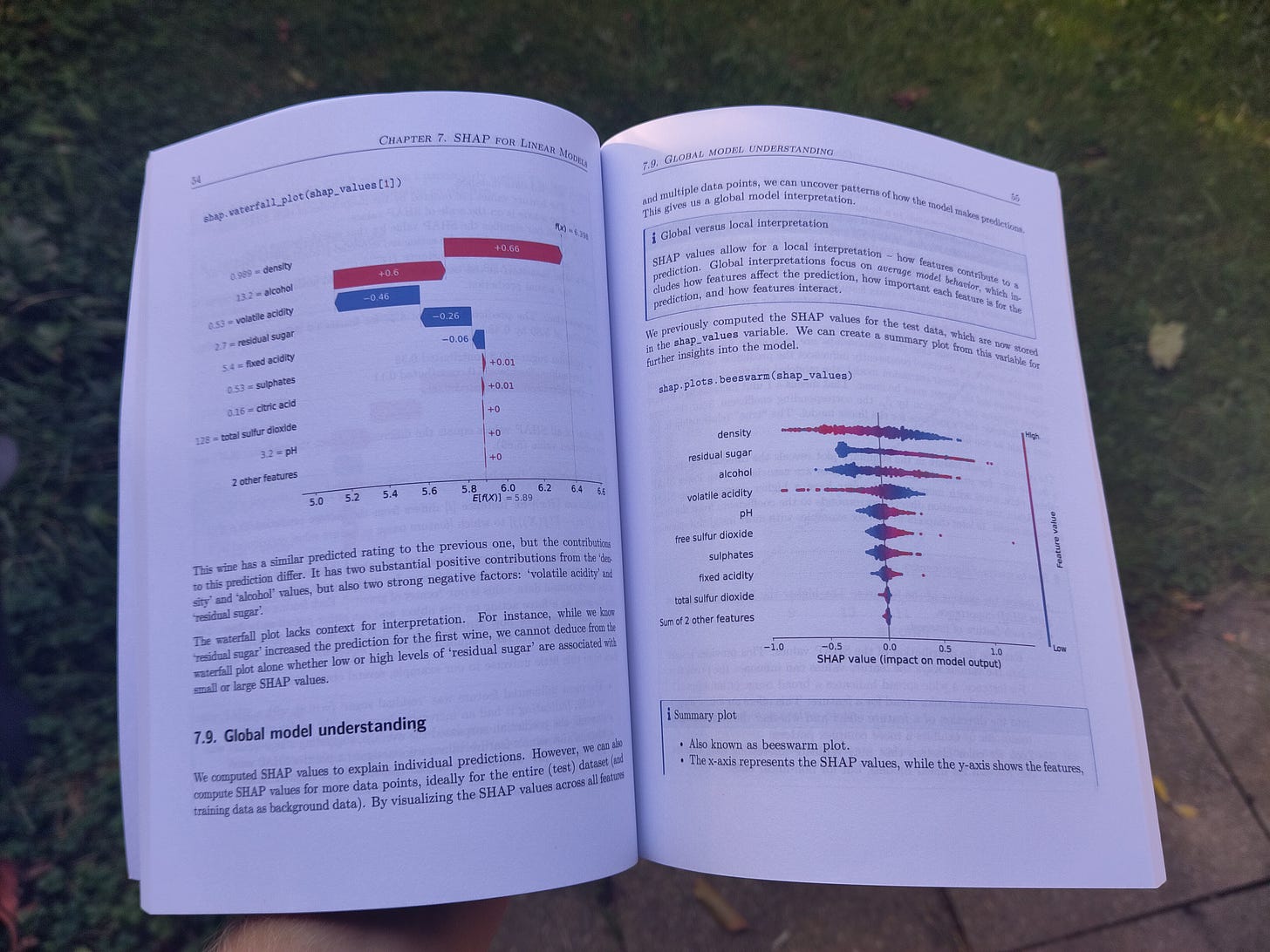

SHAP can be used to explain individual predictions.

By combining explanations for individual predictions, SHAP allows you to study the overall model behavior.

SHAP is model-agnostic – it works with any model, from simple linear regression to deep learning.

With its flexibility, SHAP can handle various data formats, whether it’s tabular, image, or text.

The Python package shap makes the application of SHAP for model interpretation easy.

The SHAP book

This book will be your comprehensive guide to mastering the theory and application of SHAP. It starts with the quite fascinating origins of SHAP in game theory and explores what splitting taxi costs has to do with explaining machine learning predictions. Starting with using SHAP to explain a simple linear regression model, the book progressively introduces SHAP for more complex models. You’ll learn the ins and outs of the most popular explainable AI method and how to apply it using the shap package.

This book is for data scientists, statisticians, machine learners, and anyone who wants to learn how to make machine learning models more interpretable. To get the most out of this book, you should already be familiar with machine learning, and you should know your way around Python to follow the code examples.

This book takes readers on a comprehensive journey from foundational concepts to practical applications of SHAP. It conveys clear explanations, step-by-step instructions, and real-world case studies designed for beginners and experienced practitioners to gain the knowledge and tools needed to leverage Shapley Values for model interpretability/explainability effectively.

– Carlos Mougan, Marie Skłodowska-Curie AI Ethics Researcher

This book is a comprehensive guide in dealing with SHAP values and acts as an excellent companion to the interpretable machine learning book. Christoph Molnar's expertise as a statistician shines through as he distills the theory of SHAP values and their crucial role in understanding Machine Learning predictions into an accessible and easy to read text.

– Junaid Butt, Research Software Engineer at IBM Research

As in all his work, Christoph once again demonstrates his ability to make complex concepts accessible through clear visuals and concise explanations. Showcasing practical examples across a range of applications brings the principles of SHAP to life. This comes together as a thought provoking, informative read for anyone tasked with translating model insights to diverse audiences.

– Joshua Le Cornu, Orbis Investments

I was never bored diving into the world of SHAP values through this book, as it strikes the perfect balance between depth and understandability. Its rich, real-world applications, presented in an engaging manner, have truly elevated my appreciation for the topic. I'd highly recommend this book to my colleagues.

– Valentino Zocca, AI expert and author of Python Deep Learning

The e-book version is available from Leanpub.