Why Machines Learn: The Elegant Maths Behind Modern AI by Anil Ananthaswamy is quite an unusual book.

This post is a short review of the book, with a disclaimer: I approached it from a writer’s point of view, which means I didn’t read it front to back but instead analyzed its scope and style.

I came across the book on Twitter (some call it X). It caught my eye since it’s an unusual mix of machine learning history, theory, and math.

Popular science books simplify a complex topic for a broad audience without much knowledge of the subject. Technical/academic books are written for experts (or students who want to become experts) and don’t shy away from deep math and theory. Why Machines Learn is a combination of both.

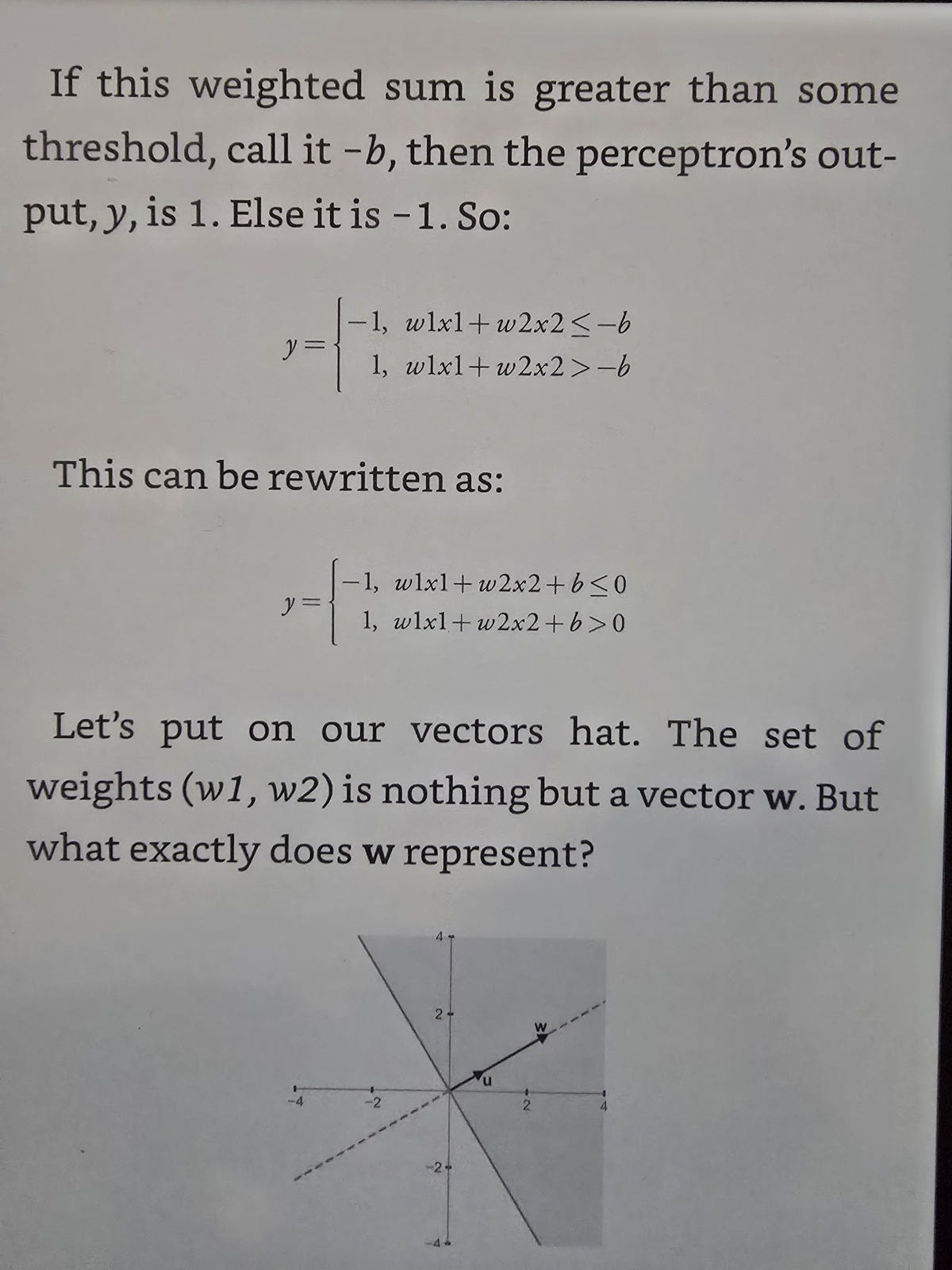

Most chapters begin with stories about people, typically machine learning researchers, and read like a popular science book. These parts are well-narrated, casual, and engaging. As the chapters get deeper, the book confronts you with math. Lots of math. It explains all the steps from scratch, and it goes well beyond a formula here and there. For example, Chapter 2 begins with a story of the Irish mathematician William Rowan Hamilton writing a letter to his son. A few pages later, we are multiplying vectors and learning about the dot product.

I find this to be a rather unusual mix, and I couldn’t say who the target audience is. Someone who wants to learn the math behind machine learning might take a more direct path without the narrated parts. Someone who wants to learn about the history of machine learning might be put off by the math.

However, the book has received many positive reviews, so there are certainly many people for whom the book is a good fit.

Writing a book is a balance between writing something you love to write about and writing something others want to read. A lot of the advice I’ve read leans heavily toward designing the book around what you think the reader will want to read. However, for me at least, what readers want is not so easy to anticipate. For example, I still fail to predict how well any particular Mindful Modeler post will be received. I thought my post on “Meme Collapse” would do better, and I didn’t at all expect that my post “My perfectly imperfect note-taking system for ML papers” would do so well in terms of reads and likes.

I feel that Anil Ananthaswamy took a risk. He even mentions in the book that his initial motivation came from hearing a lecture on the perceptron convergence proof. I speculate that this was the book he wanted to write, and that he accepted the risk that its target audience would be hard to define.

I find that very refreshing. I struggle with the balance between what I want to write about and things I imagine that my readers will want to read. Sometimes to the point of analysis paralysis. My takeaway from Why Machines Learn is to take more risks in my writing.

'Why' seems like a big ask. In biology that means understanding the deep eco/evo/devo tradeoffs as well as the how at the cell-molecular level. What does it mean for modeling as a field?