No learning without randomness

Randomness is not a nuisance, but an elemental mechanism in machine learning

We call it noise, volatility, nuisance, variance, non-determinism, and uncertainty. We have to work hard to keep randomness under control: we try to keep our code reproducible with random seeds, we have to deal with missing values, and sometimes we struggle because the data are not a representative random sample.

But there’s another side to randomness.

Without randomness, there would be no machine learning. In this post, we explore the role of randomness in helping machines learn.

You can find randomness in a lot of places:

The random forest algorithm uses randomness in two places: bootstrapping and column sampling. Both mechanisms make the learned trees differ from one another, and averaging these decorrelated trees improves the predictive performance of the ensemble.
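A minimal sketch of both mechanisms in plain Python. The helper names `bootstrap_indices` and `candidate_features` are hypothetical, and the sizes are arbitrary. In Breiman's original algorithm, the random subset of features is redrawn at every split of every tree, so `candidate_features` would be called once per split, not once per tree.

```python
import random


def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices *with* replacement: one bootstrap sample for one tree."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def candidate_features(n_features, max_features, rng):
    """Draw max_features distinct column indices to consider at a single split."""
    return sorted(rng.sample(range(n_features), max_features))


rng = random.Random(42)  # fixed seed for reproducibility
n_rows, n_features = 1000, 10
for tree in range(3):
    rows = bootstrap_indices(n_rows, rng)
    n_unique = len(set(rows))  # rows never drawn are "out-of-bag" for this tree
    feats = candidate_features(n_features, max_features=3, rng=rng)
    print(f"tree {tree}: {n_unique} unique rows, "
          f"{n_rows - n_unique} out-of-bag, first split considers columns {feats}")
```

On average, a bootstrap sample contains about 63% of the distinct rows (the expected share is 1 - (1 - 1/n)^n, which approaches 1 - 1/e), so every tree is trained on a somewhat different dataset.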

Stochastic Gradient Descent (SGD) is how many deep neural networks are optimized. Batches of data are randomly drawn, and the network's weights are updated batch by batch. SGD helps the network get unstuck from local minima and also implicitly regularizes the model.
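The random-batch mechanics can be sketched in a few lines of plain Python. To keep it short, this minimal sketch fits a one-feature linear regression instead of a neural network. That loss has no local minima, so the example only shows how batches are randomly drawn and used for updates, not the escaping or regularizing effects. The function name, learning rate, batch size, and synthetic data are illustrative assumptions.

```python
import random


def sgd_linear_regression(xs, ys, lr=0.05, batch_size=8, epochs=50, seed=0):
    """Fit y ~ w * x + b with minibatch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    idx = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(idx)  # a new random order of the rows in every epoch
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]
            # Gradient of the squared error, averaged over this batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Synthetic data from y = 3x + 0.5 plus a little Gaussian noise.
data_rng = random.Random(1)
xs = [data_rng.uniform(-1, 1) for _ in range(200)]
ys = [3.0 * x + 0.5 + data_rng.gauss(0, 0.1) for x in xs]
w, b = sgd_linear_regression(xs, ys)
print(f"w = {w:.2f}, b = {b:.2f}")
```

Each update sees only 8 of the 200 rows, so the gradient is a noisy estimate of the full-data gradient; the fitted values should still land close to the true 3.0 and 0.5.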

For hyperparameter tuning and evaluation, we randomly split the data into training, validation, and test sets, at least in the simplest setup. This random split is the foundation for checking whether models generalize to unseen data from the same distribution.
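A minimal sketch of the simplest random three-way split in plain Python. The 70/15/15 proportions and the function name are assumptions for illustration; scikit-learn's `train_test_split` serves the same purpose. Fixing the seed makes the split reproducible.

```python
import random


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle the rows once, then cut the shuffled list into test, validation, and training parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded: the same split on every run
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))
```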

Weights in neural networks are randomly initialized.
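Random initialization breaks the symmetry between neurons: if all weights started at the same value, every neuron in a layer would receive the same gradient and the neurons would stay identical. A minimal sketch of one common scheme, Glorot (Xavier) uniform initialization, which draws each weight from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)). The choice of this scheme and the layer sizes are assumptions; deep learning frameworks offer several alternatives.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Return a fan_out x fan_in weight matrix (one row per neuron) drawn from U(-a, a)."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(0)
W = glorot_uniform(fan_in=4, fan_out=3, rng=rng)
for row in W:
    print([round(v, 3) for v in row])

# A constant initialization gives every neuron identical weights: no symmetry breaking.
W_const = [[0.5] * 4 for _ in range(3)]
print("distinct neurons, random init:", len({tuple(r) for r in W}))
print("distinct neurons, constant init:", len({tuple(r) for r in W_const}))
```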

Data augmentation relies on random modifications of the data.
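A minimal sketch of two common random image augmentations, a horizontal flip and a crop at a random position, applied to a tiny grayscale image stored as nested lists. Real pipelines would use an image library; the function names, flip probability, and crop size here are assumptions. Because the random transformations are redrawn each time an image is used, the model sees a slightly different version of it in every epoch.

```python
import random


def random_flip(image, rng, p=0.5):
    """Mirror the image left-to-right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return [row[:] for row in image]


def random_crop(image, size, rng):
    """Cut out a size x size patch at a uniformly random position."""
    height, width = len(image), len(image[0])
    top = rng.randrange(height - size + 1)
    left = rng.randrange(width - size + 1)
    return [row[left:left + size] for row in image[top:top + size]]


def augment(image, crop_size, rng):
    """Randomly flip, then randomly crop."""
    return random_crop(random_flip(image, rng), crop_size, rng)


image = [[4 * r + c for c in range(4)] for r in range(4)]  # 4x4 toy image
rng = random.Random(7)
for epoch in range(3):
    print(f"epoch {epoch}:", augment(image, crop_size=3, rng=rng))
```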

XGBoost has hyperparameters to sample columns and rows.
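In XGBoost, this sampling is controlled by parameters such as `subsample` for rows and `colsample_bytree` for columns (`colsample_bylevel` and `colsample_bynode` sample columns per tree level and per split). The dictionary below uses these real parameter names with arbitrary example values; the training call stays a comment so the snippet runs without `xgboost` installed. The helper `sample_round` is hypothetical and only mimics the idea, drawing rows and columns without replacement for each tree, unlike the bootstrap in random forests; XGBoost's internal sampling may differ in detail.

```python
import random

# Real XGBoost parameter names; the values are arbitrary examples.
params = {
    "objective": "reg:squarederror",
    "subsample": 0.8,         # share of training rows sampled before growing each tree
    "colsample_bytree": 0.5,  # share of columns sampled when constructing each tree
    "seed": 42,               # random seed for reproducibility
}
# With xgboost installed and a DMatrix `dtrain`, training would look like:
# booster = xgboost.train(params, dtrain, num_boost_round=100)


def sample_round(n_rows, n_cols, subsample, colsample_bytree, rng):
    """Hypothetical helper: mimic one tree's draw of rows and columns, without replacement."""
    rows = rng.sample(range(n_rows), max(1, round(n_rows * subsample)))
    cols = rng.sample(range(n_cols), max(1, round(n_cols * colsample_bytree)))
    return sorted(rows), sorted(cols)


rng = random.Random(params["seed"])
for boosting_round in range(3):
    rows, cols = sample_round(100, 6, params["subsample"], params["colsample_bytree"], rng)
    print(f"round {boosting_round}: {len(rows)} of 100 rows, columns {cols}")
```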

And there are plenty more examples in ML where randomness is a central tool for learning.

But why is randomness so essential? I have already mentioned the purpose of randomness in some of the examples: enabling generalization, regularizing the optimization process, and escaping local minima.

However, I hadn’t thought of randomness as an essential tool, probably because it is either built into the algorithms themselves (e.g., random forests and neural networks) or part of best practice, like randomly splitting data into training, validation, and test sets.

But it’s worth thinking about the use of randomness more proactively. I now see randomness as a way to introduce inductive biases into learning algorithms and as a tool to align ML models with the data-generating process. For example, you want the training/validation/test split to reflect how the data are produced when the model is used for predictions. If you forecast store sales and want the model to work for new stores, you should split the data by store instead of treating the rows as independent. And this goes even further: you may not want the ML algorithm itself to sample individual rows, because that, too, ignores the entity structure. See also my post on dealing with non-IID data.
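For the store example, a minimal sketch of a split by store in plain Python: the randomness is applied to store IDs, so all rows of a store land on the same side. The row layout (dicts with `store`, `week`, and `sales` keys), the 20% test share, and the function name are assumptions for illustration; scikit-learn offers `GroupShuffleSplit` and `GroupKFold` for the same purpose.

```python
import random


def group_split(rows, group_key, test_frac=0.2, seed=0):
    """Randomly assign whole groups (e.g. stores) to train or test, never splitting a group."""
    groups = sorted({row[group_key] for row in rows})  # sort first so the seed fully determines the split
    random.Random(seed).shuffle(groups)
    n_test = max(1, round(len(groups) * test_frac))
    test_groups = set(groups[:n_test])
    train = [row for row in rows if row[group_key] not in test_groups]
    test = [row for row in rows if row[group_key] in test_groups]
    return train, test


# Toy data: 10 stores observed for 5 weeks each.
data_rng = random.Random(1)
rows = [{"store": s, "week": w, "sales": data_rng.randint(50, 150)}
        for s in range(10) for w in range(5)]
train, test = group_split(rows, "store")
print("test stores:", sorted({r["store"] for r in test}))
```

A row-wise random split would scatter each store's weeks across training and test data, so the test score would overstate how well the model works for stores it has never seen.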

In Other News

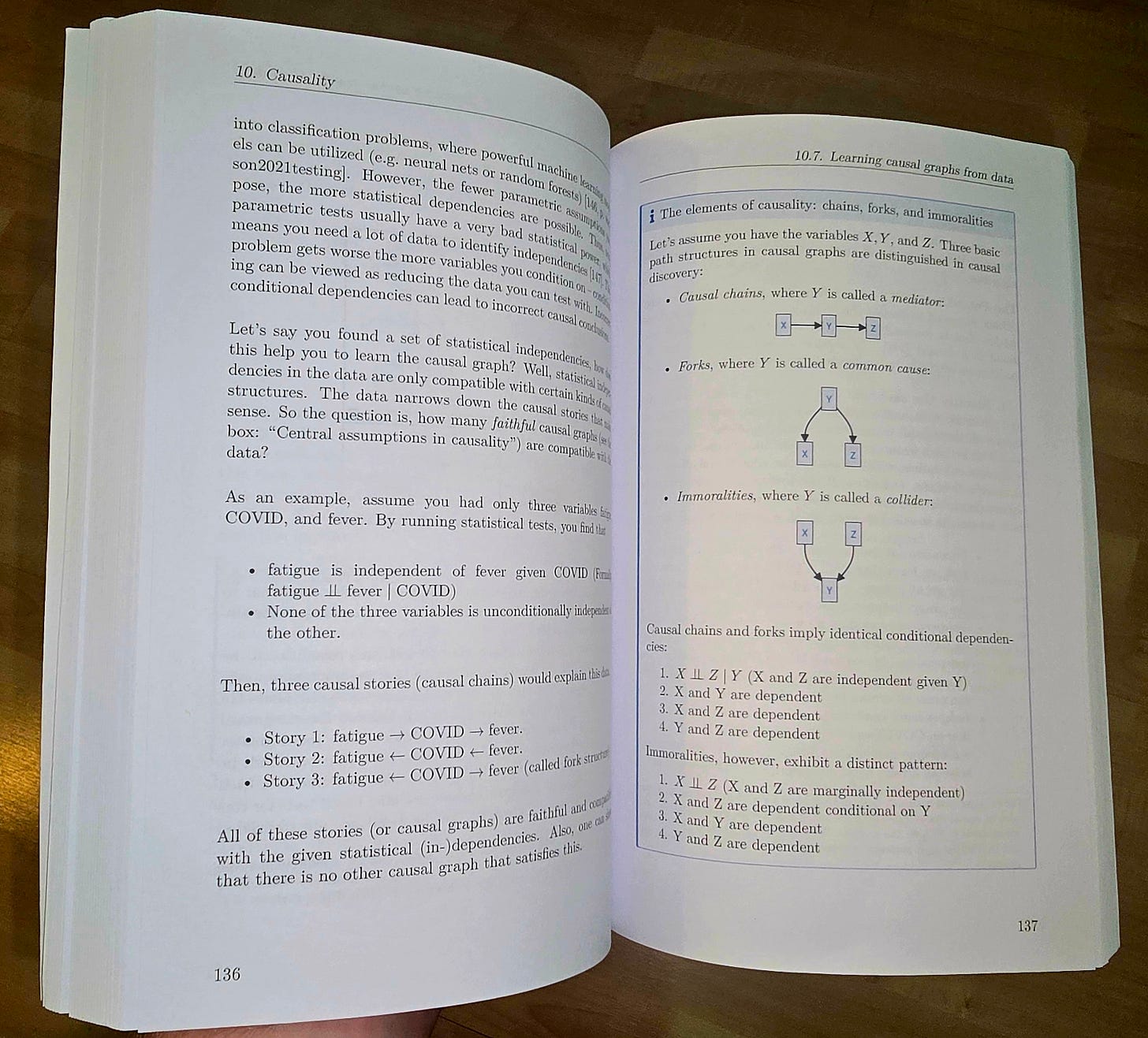

Writing a book doesn't feel real to me until I hold the physical copy in my hands.

Supervised Machine Learning for Science is now in its final stages, and we are smoothing out the last wrinkles and making sure that all the versions, from ebook to paperback, look good. With random Nobel Prizes from other fields now going to machine learning researchers, I think it’s time that Timo, my co-author, and I publish this book.

In this book, we justify the use of machine learning for science, but we also present approaches such as interpretability, causality, and robustness that address its shortcomings and make ML a great tool for science.

The release of the ebook and the print version is planned for November 4th. You can already read the book for free here: ml-science-book.com/.
