Why it's hard to make machine learning reproducible

One of my most stressful projects was about evaluating hospitals. I joined the project late since a deadline was approaching and they needed help (I was a student at the time).

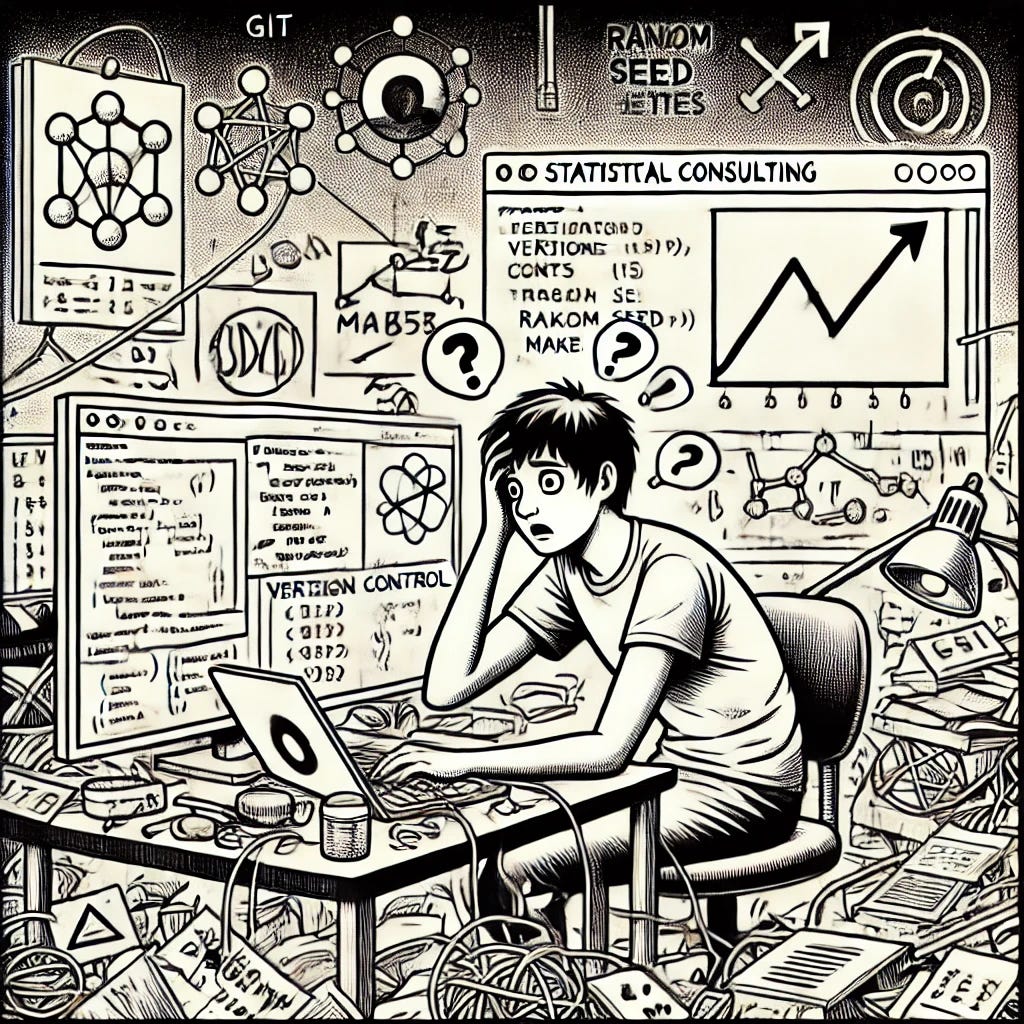

Most of my work was writing code, and there was already a large codebase. A messy one with little documentation. No version control. No automation (like a Makefile). It was stressful. For example, I lost two hours because R’s `sample` function had been overwritten by another function, also called `sample`, that worked slightly differently. So instead of an error, I just got weird results.

Well, somehow I got through this project. A year later, the project lead approached me again: there was one object (I think it was a weight vector for sampling), and he no longer knew how it had been produced. The object was just saved on disk, but no scripts or code associated with it could be found. I didn’t know where it came from either 😅.

That’s my little horror story about the importance of reproducibility.

Reproducibility sounds so easy: your results are reproducible when you run the same code on the same data and get the same results again.¹ This is also called computational reproducibility. There are many strong reasons why you want computational reproducibility:

- You submitted a paper for which you trained an ML model. A year later, the reviews come back, and Reviewer 2 asks you to retrain the model.
- You update your model in production and want to be able to roll back to an older version at any time.
- You pick up a project a year later and want to be able to reproduce everything.
- You want others to build on your work.

Computational reproducibility is hard. The typical recommendations for making your work reproducible are: using code wherever possible (reducing “manual” steps like clicking through some software), documenting code, using a good data management system, version control, stabilizing the computing environment (conda, Docker, …), and so on.
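Alongside tools like conda or Docker, it can help to record which environment a result was actually produced in. Below is a minimal sketch using only Python's standard library; the function name `environment_snapshot` and the example package list are illustrative assumptions, not part of any particular tool.

```python
import importlib.metadata
import json
import platform


def environment_snapshot(packages):
    """Collect interpreter, OS, and package versions for a run record."""
    versions = {}
    for name in packages:
        try:
            versions[name] = importlib.metadata.version(name)
        except importlib.metadata.PackageNotFoundError:
            # Record missing packages explicitly instead of failing.
            versions[name] = None
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
    }


if __name__ == "__main__":
    # Example package list (an assumption); use your project's dependencies.
    snapshot = environment_snapshot(["numpy", "scikit-learn"])
    print(json.dumps(snapshot, indent=2))
```

Saving this JSON next to each trained model or result file doesn't stabilize the environment by itself, but it tells you later which versions you would need to restore.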

When we add machine learning to the mix, reproducibility gets harder. For our book *Supervised Machine Learning for Science*, I’m taking a deep dive into reproducibility in machine learning. Here are some of the reasons that make reproducibility more challenging in ML:

Training a machine learning model is usually non-deterministic. For example, data is randomly split into training, validation, and test sets, and the weights of neural networks are randomly initialized. “Just set a random seed,” they say. But there are pitfalls: when training models in a distributed setting, for example, random seeds might not work as intended.
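To make the seeding advice concrete, here is a minimal sketch of a seeded train/validation/test split using Python's built-in `random` module. The function name `split_indices` and the 70/15/15 proportions are assumptions for illustration. Note that libraries such as NumPy and PyTorch keep their own random number generators, so seeding Python's `random` does not seed them; and this sketch does nothing about the distributed-training pitfalls mentioned above.

```python
import random


def split_indices(n, seed, train=0.7, val=0.15):
    """Randomly split row indices 0..n-1 into train/validation/test sets.

    A dedicated random.Random instance is seeded, so the split neither
    depends on nor disturbs the global random state.
    """
    rng = random.Random(seed)
    indices = list(range(n))
    rng.shuffle(indices)
    n_train = int(n * train)
    n_val = int(n * val)
    return (
        indices[:n_train],
        indices[n_train:n_train + n_val],
        indices[n_train + n_val:],
    )


# Same seed, same split.
a = split_indices(100, seed=42)
b = split_indices(100, seed=42)
print(a == b)  # True
```

Using a local generator rather than `random.seed(...)` keeps the split reproducible even if other code later draws from the global generator.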

Large datasets pose additional challenges: The larger the data, the more difficult the data management becomes. And the more difficult it may become to reproduce the project/model in a different computing environment.
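One small piece of data management that scales to large files is recording a checksum of the exact data a model was trained on, so you can later verify that you are using the same version. A minimal sketch, assuming the data lives in files on disk; the helper name `file_sha256` and the file name `train.csv` are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def file_sha256(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file.

    The file is read in chunks (1 MiB by default), so even very large
    files never have to fit in memory at once.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_file = Path(tmp) / "train.csv"  # hypothetical data file
        data_file.write_text("x,y\n1,2\n3,4\n")
        print(file_sha256(data_file))
```

Storing the digest alongside the model (or in version control) is much cheaper than versioning the data itself, though it only detects changes; it cannot restore an old version.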

Long training times: the way I ensure that my project or training run is reproducible is to run it twice and check that the outcome is the same. For larger ML projects with very long and expensive training times, this isn’t feasible. So you have to make sure beforehand that, for example, all random seeds are set.
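For cheap runs, the “run it twice” check can be automated by hashing the results of two runs and comparing them. The sketch below uses a hypothetical toy training function (SGD fitting the slope of y = 2x plus noise) as a stand-in for a real pipeline; `train_toy_model` and `fingerprint` are illustrative names, not a library API. An exact hash comparison is deliberately strict: on real hardware, especially GPUs, results may differ in the last bits even when the pipeline is otherwise reproducible.

```python
import hashlib
import json
import random


def train_toy_model(seed, epochs=20, lr=0.01):
    """Hypothetical stand-in for a training run: SGD on y = 2x + noise."""
    rng = random.Random(seed)
    # Synthetic data, drawn from the same seeded generator.
    data = [(x, 2 * x + rng.gauss(0, 0.1)) for x in (i / 10 for i in range(50))]
    w = rng.uniform(-1, 1)  # random weight initialization
    for _ in range(epochs):
        rng.shuffle(data)  # random order of training examples
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x  # gradient step on squared error
    return {"w": w}


def fingerprint(result):
    """Hash a JSON-serializable result so two runs can be compared exactly."""
    return hashlib.sha256(json.dumps(result, sort_keys=True).encode()).hexdigest()


run1 = fingerprint(train_toy_model(seed=123))
run2 = fingerprint(train_toy_model(seed=123))
print("reproducible:", run1 == run2)  # prints: reproducible: True
```

When a real run takes days, the same fingerprint can still be logged once, so that a later rerun (if one ever happens) has something to be compared against.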

Jupyter notebooks make it easy to run into reproducibility issues. While you can track notebook changes with git, it’s messy since notebooks contain a lot of overhead like cell outputs. A larger threat to reproducibility is hidden state and non-linear execution: notebooks invite you to execute cells individually, and you can change cells at any time. With notebooks, you can create quite a mess and find yourself in a situation where you don’t know in which order to run the cells, and some crucial cells may even have been overwritten or deleted.

Using proprietary algorithms and APIs: if you rely on platforms with custom implementations of ML algorithms, it may be difficult or even impossible to reproduce results in the future. The company may change the algorithm at any time or go bankrupt.

LLMs combine all the problems of APIs, large data, long training times, and non-determinism.

Do you have any “horror stories” with reproducibility in machine learning or other data-related projects? Or any additional reasons why reproducibility is challenging for machine learning?

¹ There are many different definitions of reproducibility. I’m using the one suggested by The Turing Way Book.
