Week #4: Overview Of Conformal Predictors

Conformal prediction is not just one method, but a general recipe: understand it once, apply it everywhere

Reading time: 5 min

Welcome to course week #4 of Introduction to Conformal Prediction (see #1, #2, #3).

This week, we’ll learn about

an overview of conformal predictors

and the best way to stay up to date with new research

In week 1 we learned about conformal classification and in week 3 about conformal regression.

While regression and classification are common machine learning tasks, they are a tiny subset of the many tasks machine learning can do. What about other tasks? Can we use conformal prediction for image segmentation, survival analysis, and time series forecasting?

The good news is: For many machine learning tasks we have conformal methods.

But there’s bad news: The implementations of these algorithms — naturally — are always a little behind. Since many papers are quite new (2020s), not all these methods are established, software-wise. For example, MAPIE “only” implements regression, classification, and time series forecasting. If you are lucky, researchers publish code with their papers, but it’s not the same as having a well-documented, well-tested, and well-implemented library such as MAPIE.

How to quickly categorize a conformal prediction method

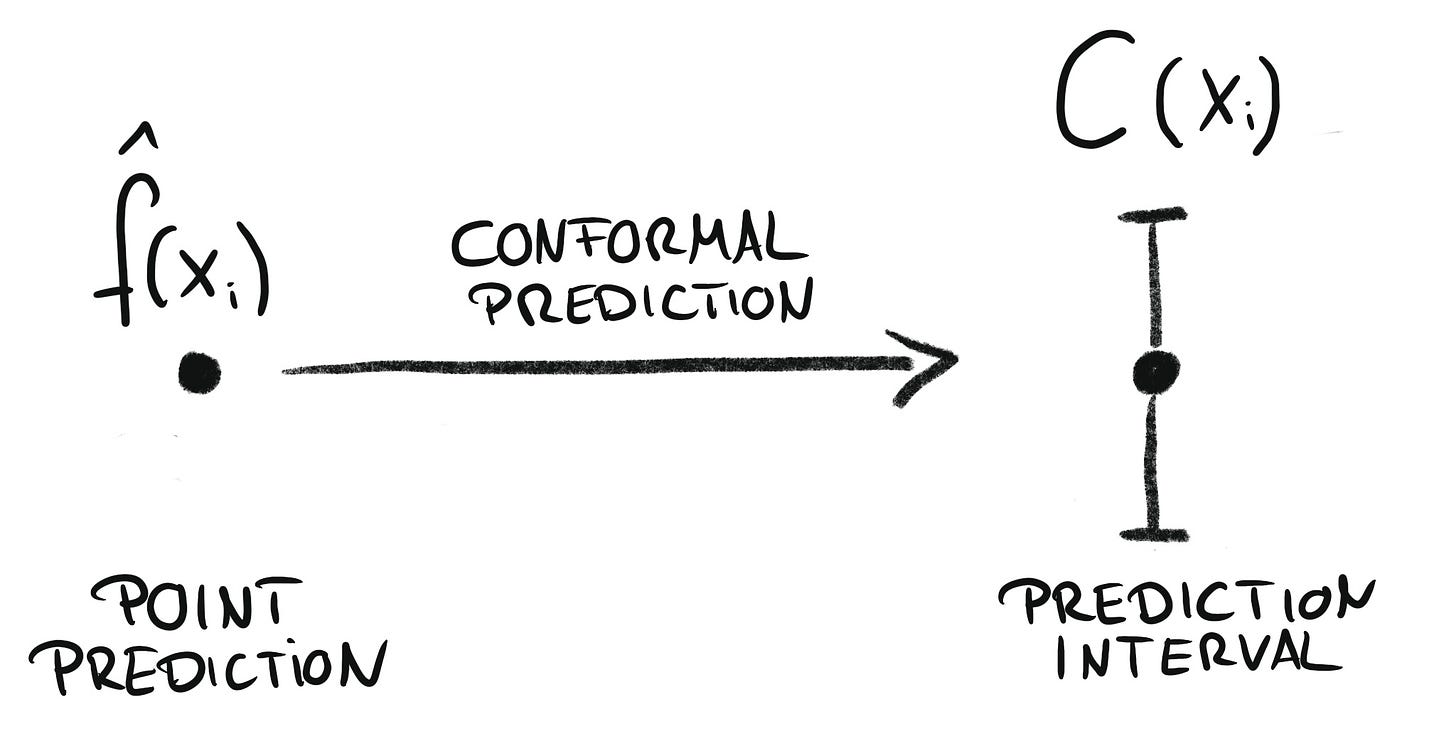

A useful image to keep in mind is that conformal prediction produces prediction regions:

But what a region looks like can differ a lot between machine learning tasks (e.g. sets for classification vs. intervals for regression). And even within a single task like classification, different non-conformity scores can be used, which lead to different conformal prediction algorithms.

When you learn about a new conformal prediction method, these are the questions you should ask:

What machine learning task is the conformal prediction method for?

Which non-conformity score was used?

How does the method deviate from the default “recipe” of conformal prediction?

An example: Regularized Adaptive Prediction Sets (RAPS)

From the paper abstract, we can quickly see that RAPS is for classification. Reading further, we find that it uses cumulative probability scores as the non-conformity score, the same as APS (adaptive prediction sets). The two approaches differ, however: RAPS uses regularization during the calibration step to filter out noise classes.
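
To make the cumulative probability score concrete, here is a minimal sketch in plain Python. It shows the non-randomized variant of the score; the function name `aps_score` and the parameters `lam` and `k_reg` are my own illustrative choices, and the penalty term is a simplified reading of the RAPS regularization, not the paper's reference code.

```python
def aps_score(probs, true_label, lam=0.0, k_reg=0):
    """Cumulative probability of all classes ranked at or above the true class.

    With lam > 0, a RAPS-style penalty is added for every rank beyond k_reg
    (lam and k_reg are hypothetical names for the regularization parameters).
    """
    # Class indices sorted from most to least probable.
    order = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)
    rank = order.index(true_label) + 1  # 1-based rank of the true class
    cumulative = sum(probs[c] for c in order[:rank])
    return cumulative + lam * max(0, rank - k_reg)


probs = [0.6, 0.3, 0.1]
print(aps_score(probs, true_label=1))                    # plain APS score
print(aps_score(probs, true_label=2, lam=0.1, k_reg=1))  # with RAPS-style penalty
```

With `lam=0` this is the APS score. The penalty makes low-ranked classes look less conforming, which is how the regularization keeps unlikely "noise" classes out of the prediction sets.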

The following collection of conformal procedures (sorted by task) is just to give you an impression of the huge variety that’s out there and by no means an attempt at an exhaustive overview. For better overviews, I’ll recommend some awesome resources later in the post.

Let’s get started.

Some Conformal Predictors By Task

Classification

For classification, conformal prediction turns classifications into classification sets.

Conformal classification algorithms usually work with the (probability) score output of classification models. There are many different conformal classification algorithms that use different non-conformity scores or adapt the procedure to e.g. use regularization: we have the score method, top-k, adaptive prediction sets, and regularized adaptive prediction sets. All are implemented in MAPIE.
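
As a reminder of how the simplest of these works, here is a minimal sketch of the score method using only the standard library. The function names and the toy data are made up for illustration; in practice you would rely on a tested library such as MAPIE.

```python
import math


def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of the calibration scores."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the order statistic to use
    if k > n:
        return float("inf")  # too few calibration points for this alpha
    return sorted(scores)[k - 1]


def score_method_sets(cal_probs, cal_labels, new_probs, alpha=0.1):
    """Prediction sets with non-conformity score 1 - p(true class)."""
    scores = [1.0 - probs[label] for probs, label in zip(cal_probs, cal_labels)]
    q_hat = conformal_quantile(scores, alpha)
    # Keep every class whose score would not exceed the calibrated threshold.
    return [[c for c, p in enumerate(probs) if 1.0 - p <= q_hat] for probs in new_probs]


# Toy calibration data: nine binary predictions whose true class is always 0.
cal_probs = [[p, 1 - p] for p in (0.9, 0.8, 0.7, 0.6, 0.95, 0.85, 0.75, 0.65, 0.5)]
cal_labels = [0] * 9
sets = score_method_sets(cal_probs, cal_labels, [[0.7, 0.3], [0.5, 0.5]], alpha=0.1)
print(sets)  # [[0], [0, 1]]
```

The confident prediction gets a single class, while the ambiguous one gets both. Swapping in a different non-conformity score is what turns this into one of the other algorithms.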

Probability Calibration

When you think about calibrating the output of a classification model that outputs probabilities, prediction sets are usually not the first option that comes to mind.

Many think of calibration as making sure that the output probabilities match the actual probabilities. For example, a 90% classification score should imply that 9 out of 10 times the true class was predicted correctly.

Conformal prediction can also tackle this form of calibration with so-called Venn-ABERS predictors. Staying true to conformal prediction, Venn-ABERS predictors don’t just adjust the probability scores but provide a range of probabilities.
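
To give a feel for where the range of probabilities comes from, here is a rough pure-Python sketch of an inductive Venn-ABERS predictor as I understand it: isotonic regression is fitted twice on the calibration data, once with the new point labeled 0 and once labeled 1. All function names are illustrative, and this is not a reference implementation.

```python
def isotonic_fit(points):
    """Pool-adjacent-violators: non-decreasing fit to (score, label) pairs."""
    # Group labels by score so that tied scores share one fitted value.
    groups = {}
    for s, y in points:
        total, count = groups.get(s, (0.0, 0))
        groups[s] = (total + y, count + 1)
    blocks = []  # each block: [sum of labels, count, largest score in block]
    for s in sorted(groups):
        total, count = groups[s]
        blocks.append([total, count, s])
        # Merge while the previous block's mean exceeds the current block's mean.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            total, count, last = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = last
    return blocks


def fitted_value(blocks, s):
    """Fitted probability of the block that contains score s."""
    for total, count, last in blocks:
        if s <= last:
            return total / count
    return blocks[-1][0] / blocks[-1][1]


def venn_abers_interval(cal_scores, cal_labels, new_score):
    """Lower and upper calibrated probability of class 1 for a new score."""
    cal = list(zip(cal_scores, cal_labels))
    p0 = fitted_value(isotonic_fit(cal + [(new_score, 0)]), new_score)
    p1 = fitted_value(isotonic_fit(cal + [(new_score, 1)]), new_score)
    return p0, p1


p0, p1 = venn_abers_interval([0.1, 0.2, 0.3, 0.7, 0.8, 0.9], [0, 0, 1, 0, 1, 1], 0.5)
print(p0, p1)
```

The width of the interval `[p0, p1]` reflects how much a single extra label could change the calibrated probability, so it shrinks as the calibration set grows.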

Regression

For regression, we’ve seen two approaches: turning a point prediction into a prediction interval, and turning the range between the outputs of two quantile regression models into a conformalized quantile interval.

Both are implemented in MAPIE.
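
The two regression approaches differ only in the non-conformity score. Here is a minimal sketch, assuming predictions on a held-out calibration set are already available; the function names and the toy numbers are illustrative only.

```python
import math


def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of the calibration scores."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return float("inf")
    return sorted(scores)[k - 1]


def split_conformal_interval(cal_y, cal_pred, new_pred, alpha=0.1):
    """Interval around a point prediction, using absolute residuals as scores."""
    scores = [abs(y - p) for y, p in zip(cal_y, cal_pred)]
    q_hat = conformal_quantile(scores, alpha)
    return new_pred - q_hat, new_pred + q_hat


def cqr_interval(cal_y, cal_lo, cal_hi, new_lo, new_hi, alpha=0.1):
    """Conformalized quantile regression: widen (or shrink) the quantile band."""
    # Positive score: y fell outside the band; negative: y was well inside it.
    scores = [max(lo - y, y - hi) for y, lo, hi in zip(cal_y, cal_lo, cal_hi)]
    q_hat = conformal_quantile(scores, alpha)
    return new_lo - q_hat, new_hi + q_hat


print(split_conformal_interval([1, 2, 3, 4], [1.5, 2, 2, 4.5], new_pred=10, alpha=0.5))
print(cqr_interval([1, 3.5, 1, 4], [0, 1, 2, 3], [2, 3, 4, 5], new_lo=5, new_hi=7, alpha=0.5))
```

The first variant always produces intervals of the same width, while the second inherits the adaptive width of the quantile models and only corrects it by a constant.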

Time Series Forecasting

Time series forecasting can be seen as a form of regression, but with a time structure.

⚡️ That’s a problem ⚡️

While conformal prediction is called “distribution-free” it still needs the assumption of exchangeability. However, in time series, due to the order of the data points, calibration data and new data aren’t exchangeable.

Fortunately, conformal prediction can still work when a weighting function is used in the calibration step. These weights are proportional to how similar the underlying distributions of two data points are. The more distribution shift has happened over time, the smaller the weights.

This weighting-by-distribution-shift approach can be used not only for time series but for all scenarios where you have a covariate shift or, even worse, a distribution shift that includes the target. Time series forecasting is implemented in MAPIE.
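
Here is a minimal sketch of what weighting changes in the calibration step: instead of a plain quantile of the scores, we take a weighted quantile. The exponential decay used for the weights below is purely an assumption for illustration; how to choose good weights depends on the method and the data.

```python
def weighted_conformal_quantile(scores, weights, alpha):
    """Smallest score whose cumulative normalized weight reaches 1 - alpha.

    The new data point gets weight 1 and is treated as a score of +infinity.
    """
    total = sum(weights) + 1.0
    cumulative = 0.0
    for score, weight in sorted(zip(scores, weights)):
        cumulative += weight / total
        if cumulative >= 1 - alpha:
            return score
    return float("inf")  # not enough (weighted) calibration mass


# Absolute residuals from oldest to newest: errors were larger in the past.
residuals = [5.0, 4.0, 1.0, 2.0]
decay = 0.5  # hypothetical decay rate: each step back in time halves the weight
n = len(residuals)
recency_weights = [decay ** (n - 1 - i) for i in range(n)]

q_uniform = weighted_conformal_quantile(residuals, [1.0] * n, alpha=0.5)
q_recent = weighted_conformal_quantile(residuals, recency_weights, alpha=0.5)
print(q_uniform, q_recent)  # 4.0 2.0
```

With equal weights this reduces to the usual conformal quantile. With recency weights, the old, large residuals count less, so the interval half-width follows the more recent behavior of the series.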

Outlier Detection

Wait, that’s an unsupervised learning task! Conformal prediction doesn’t care. An outlier detection model is a function, and even if you don’t have labeled data (outlier vs. non-outlier), you can still control the false positive rate. The idea: you only keep the data points that are exchangeable with the calibration data. As a non-conformity score you use whatever the underlying outlier detection model outputs.
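
One common way to do this is with conformal p-values. Here is a minimal sketch, assuming the calibration set contains only "normal" data and that higher scores mean "more unusual"; the function names are illustrative.

```python
def conformal_p_value(cal_scores, new_score):
    """Share of calibration scores at least as extreme as the new score."""
    n_at_least = sum(1 for s in cal_scores if s >= new_score)
    return (1 + n_at_least) / (len(cal_scores) + 1)


def is_outlier(cal_scores, new_score, alpha=0.1):
    """Flag the point if it looks less typical than a fraction alpha of inliers."""
    return conformal_p_value(cal_scores, new_score) <= alpha


# Outlier scores of 19 calibration points, e.g. from any outlier detection model.
cal_scores = [float(s) for s in range(1, 20)]
print(conformal_p_value(cal_scores, 100.0))  # 0.05
print(is_outlier(cal_scores, 100.0))         # True
print(is_outlier(cal_scores, 10.0))          # False
```

If a new point really is exchangeable with the calibration data, it gets flagged with probability at most `alpha`, which is the false positive rate control mentioned above.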

Image Segmentation

In image segmentation, the input is an image and the output is an image mask that has the same size as the image with 1’s indicating pixels of the segment. With conformal segmentation, we can guarantee that the average fraction of pixels of the true segment that are missed is lower than α.
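
A guarantee like this can be obtained by calibrating the probability threshold of the segmentation model. The following is a minimal sketch in the spirit of conformal risk control, assuming images are flattened into lists of per-pixel probabilities and that the loss is bounded by 1; the function names, the candidate thresholds, and the toy data are all made up for illustration.

```python
def false_negative_rate(probs, mask, threshold):
    """Fraction of true-segment pixels whose probability falls below threshold."""
    positives = [p for p, m in zip(probs, mask) if m == 1]
    if not positives:
        return 0.0
    missed = sum(1 for p in positives if p < threshold)
    return missed / len(positives)


def calibrate_threshold(cal_probs, cal_masks, alpha, candidates):
    """Largest candidate threshold whose corrected average FNR is <= alpha."""
    n = len(cal_probs)
    for t in sorted(candidates, reverse=True):
        avg = sum(false_negative_rate(p, m, t) for p, m in zip(cal_probs, cal_masks)) / n
        # Finite-sample correction: pretend one extra image has the worst loss (1).
        if (n * avg + 1) / (n + 1) <= alpha:
            return t
    return 0.0  # fall back to predicting every pixel as part of the segment


# Nine tiny "images" with three pixels each; the first two pixels are the segment.
cal_probs = [[0.9, 0.6, 0.2] for _ in range(9)]
cal_masks = [[1, 1, 0] for _ in range(9)]
threshold = calibrate_threshold(cal_probs, cal_masks, alpha=0.2, candidates=[0.5, 0.7, 0.95])
print(threshold)  # 0.5
```

For a new image, every pixel with probability at or above the calibrated threshold goes into the predicted mask. A lower threshold misses fewer true pixels but produces larger masks, so we pick the largest threshold that still meets the target.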

And Many More Tasks

…

How To Stay Up To Date

There’s an explosion of research in conformal prediction. Just have a look at this list and see how many new conformal prediction papers came out in 2020 and after. So here are a few tips on not getting lost:

Read or at least skim the paper A Gentle Introduction To Conformal Prediction and Distribution-Free Uncertainty Quantification. I learned so much from this academic tutorial and I relied a lot on this paper for this course.

Check out the Awesome Conformal Prediction repo, which is probably the most complete resource on conformal prediction. If you find it useful, you can even cite it in your paper or project. Don’t forget to star it on GitHub.

Follow Valeriy, the author of the Awesome Conformal Prediction repo, on Twitter and LinkedIn. He’s on a mission to make conformal prediction popular, and I’ve found his Twitter in particular to be the best source for new research on conformal prediction.

Haven’t found the right CP algorithm for your application? You can implement your own conformal prediction algorithm!

How to build your own conformal predictor will be the topic for the next week. Even if you don’t plan to implement one yourself, thinking this process through is very educational.

Course Outline

Check out my book on Conformal Prediction which provides hands-on examples to quantify uncertainty in your application!

Christoph, thanks for the course. It is really helpful.

I wonder why MapieClassifier assumes multiclass classification (and hence does not support binary classification). Would you know why?

Cheers,